Gemini 3 Flash Outperforms Gemini 3 Pro in Coding Tests

Google's latest model is remarkably fast and cost-effective

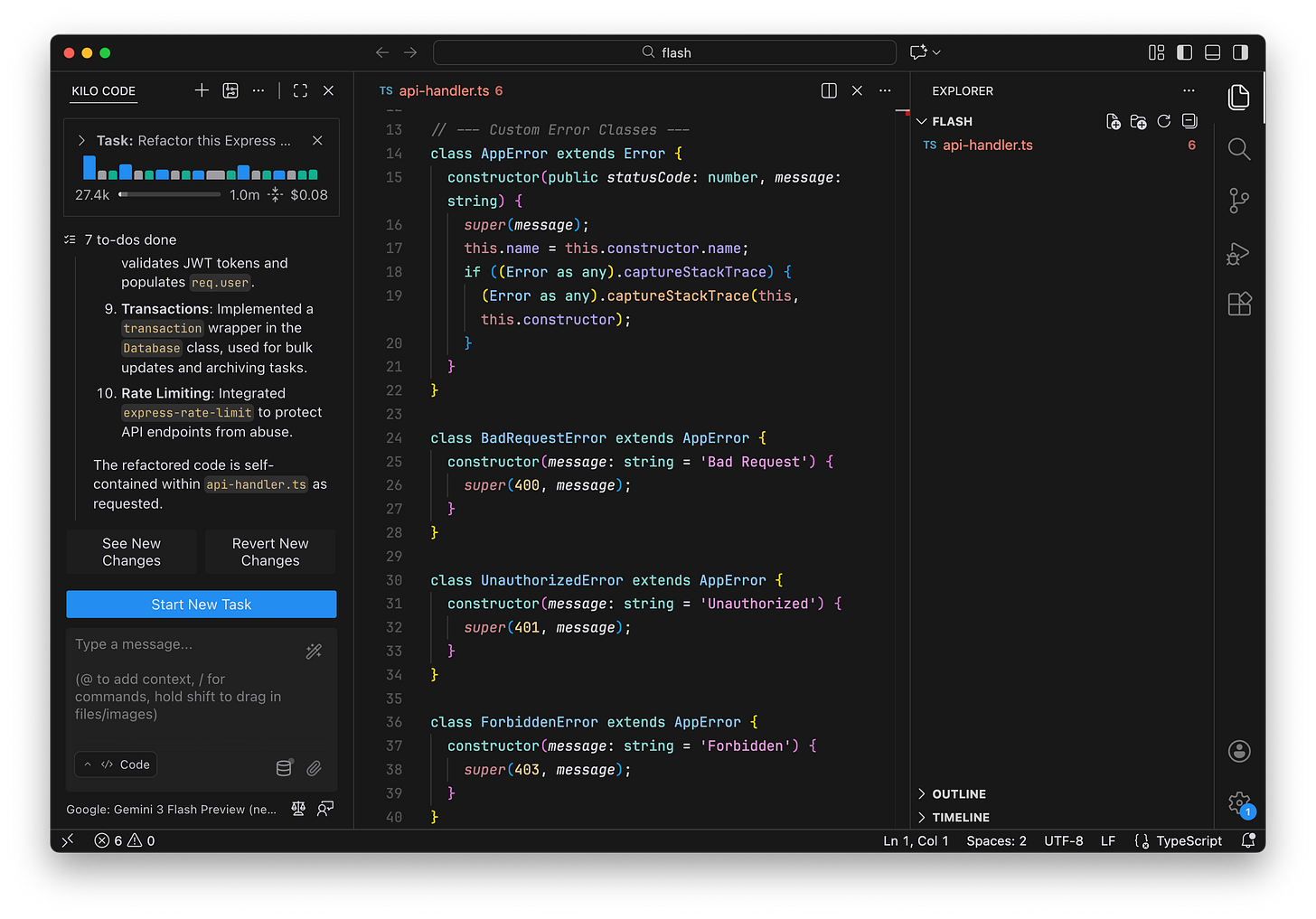

Gemini 3 Flash is now available in Kilo Code. Google released the model this week, positioning it differently from previous Flash variants. Instead of the usual “fast but less capable” tradeoff, Gemini 3 Flash delivers Pro-grade reasoning at Flash-level speed and cost. On SWE-bench Verified, it scored 78%, beating both Gemini 2.5 Pro and Gemini 3 Pro.

Within 24 hours of release, Gemini 3 Flash hit the top 20 on the Kilo leaderboard, outranking models several times its price. We ran it through the same three coding challenges we used in our earlier comparisons: one covering GPT-5.1, Gemini 3 Pro, and Claude Opus 4.5, and another covering GPT-5.2/Pro.

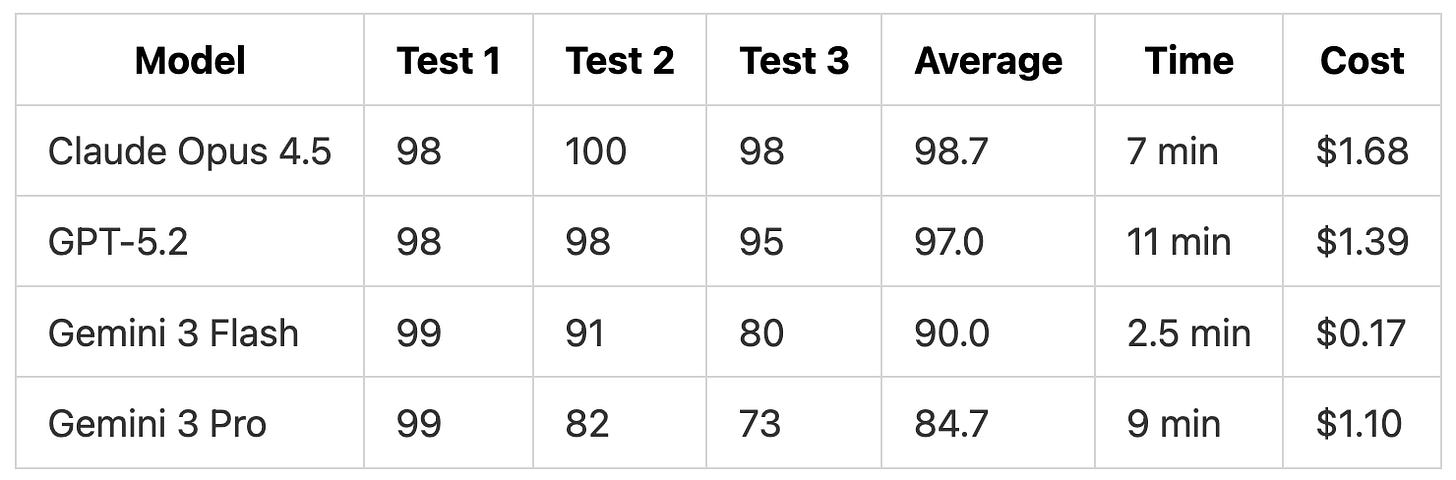

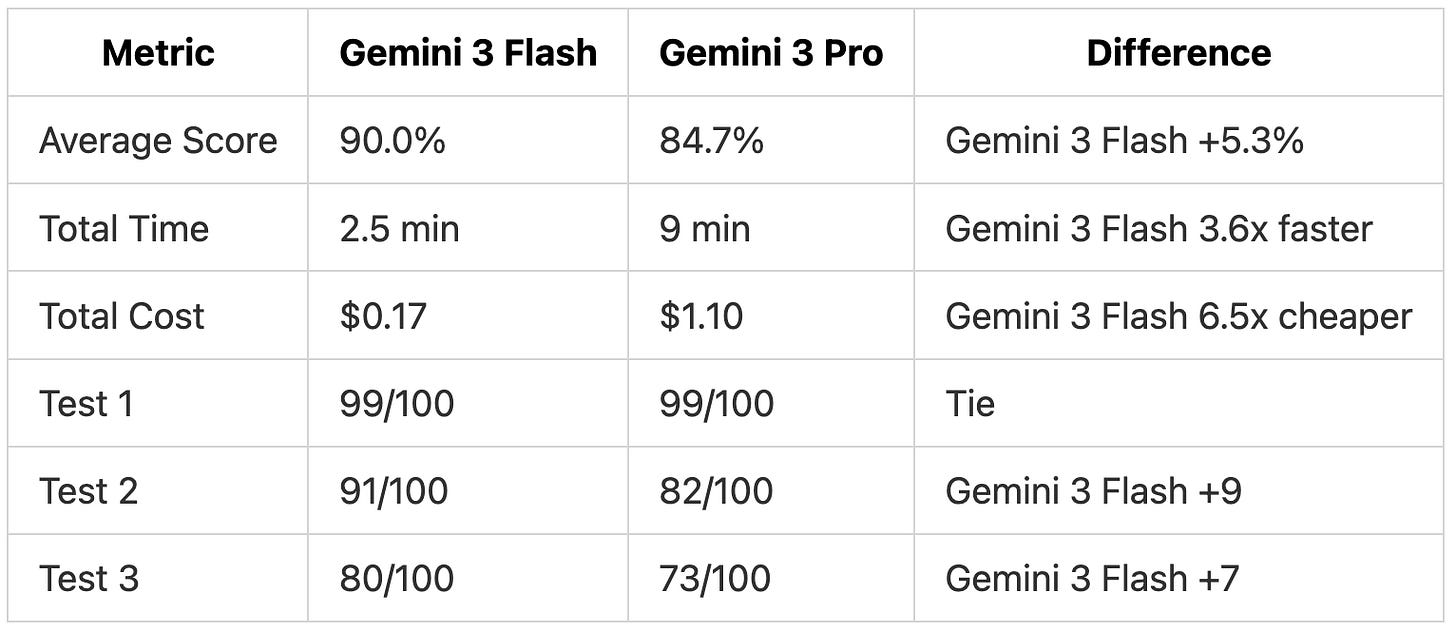

TL;DR: Gemini 3 Flash scored 90% on average across three tests while costing $0.17 total. That’s about 5 points higher than Gemini 3 Pro (84.7%), 6x cheaper, and 3.6x faster.

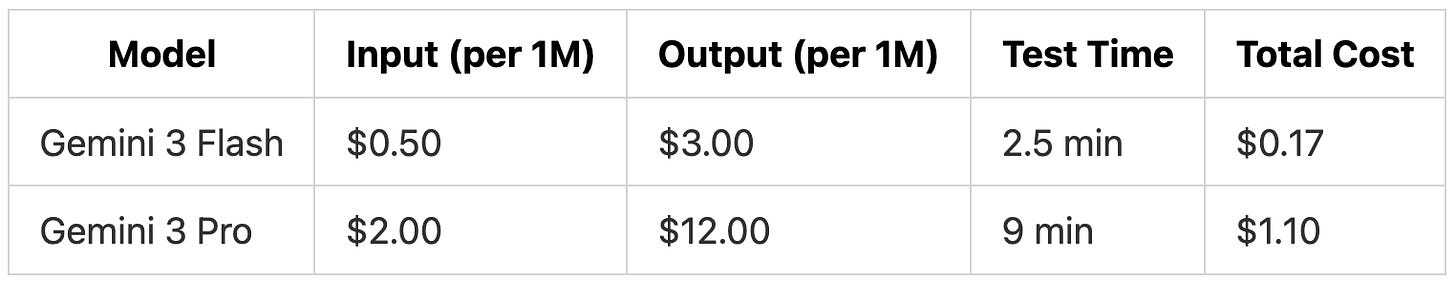

About the Models

Gemini 3 Flash is built for agentic coding workflows and responsive applications where speed matters. At 4x less than Pro pricing, it completed our three tests for $0.17 total compared to $1.10 for Gemini 3 Pro, finishing in 2.5 minutes versus 9 minutes.
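The cost and speed multiples follow directly from those totals. A quick check, using only the dollar and minute figures quoted above (the variable names are ours):

```python
# Totals for the full three-test run, as quoted in this post.
flash_cost_usd, pro_cost_usd = 0.17, 1.10
flash_minutes, pro_minutes = 2.5, 9.0

cost_ratio = pro_cost_usd / flash_cost_usd   # how many times cheaper Flash was
speed_ratio = pro_minutes / flash_minutes    # how many times faster Flash was

print(f"Cost: {cost_ratio:.1f}x cheaper, speed: {speed_ratio:.1f}x faster")
# prints "Cost: 6.5x cheaper, speed: 3.6x faster"
```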

How we tested the models

To test the latest model from Google, we used the same three tests as in our previous comparisons, one of GPT-5.1, Gemini 3 Pro, and Claude Opus 4.5 and another of GPT-5.2/Pro:

Prompt Adherence Test: A Python rate limiter with 10 specific requirements (exact class name, method signatures, error message format)

Code Refactoring Test: A 365-line TypeScript API handler with SQL injection vulnerabilities, mixed naming conventions, and missing security features

System Extension Test: Analyze a notification system architecture, then add an email handler that matches existing patterns

Each test started from an empty project in Kilo Code. We used Code Mode for Tests 1 and 2, and Ask Mode followed by Code Mode for Test 3.

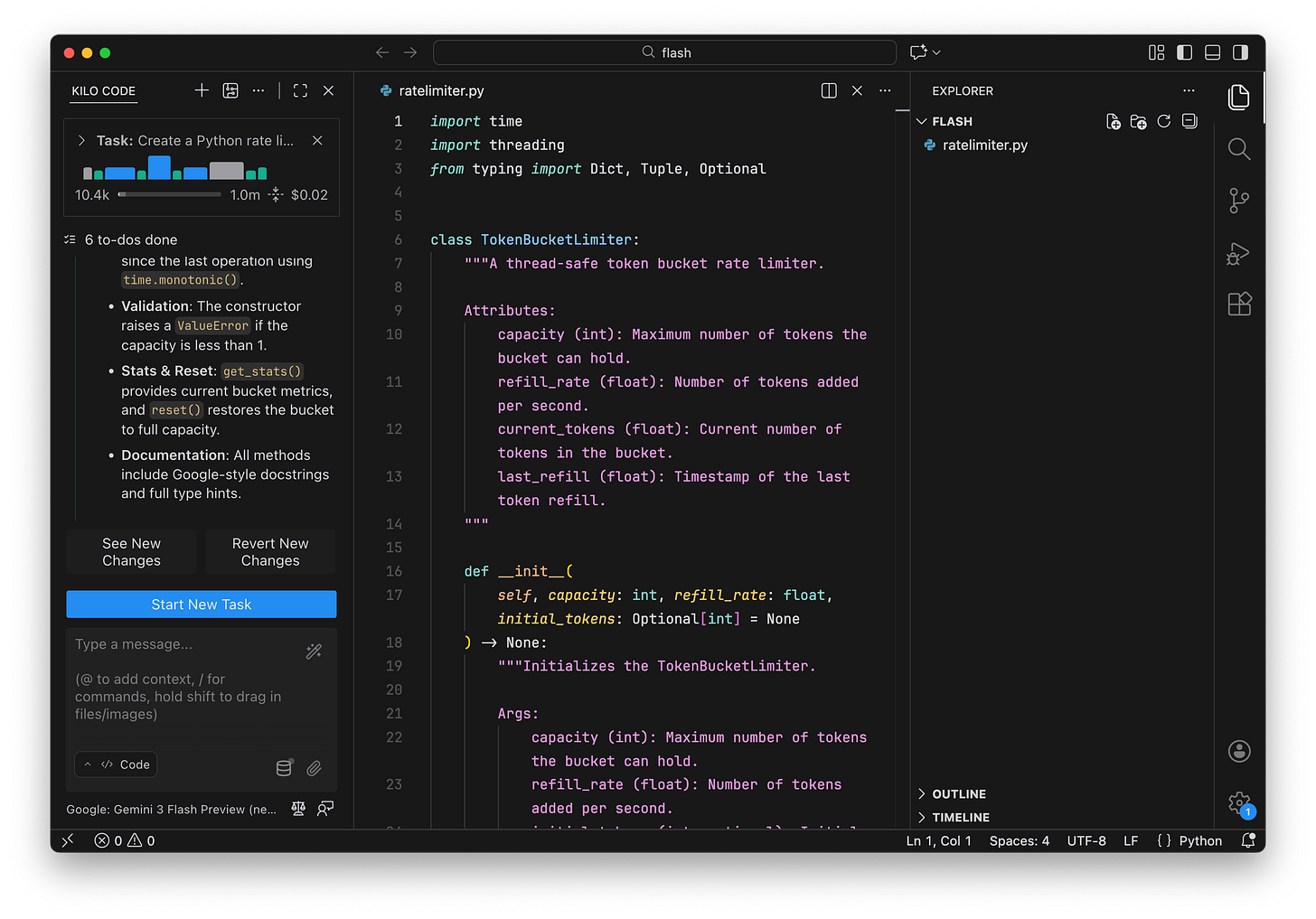

Test 1: Python Rate Limiter

We asked all models to implement a TokenBucketLimiter class with 10 specific requirements. These included the exact class name, specific method signatures, a particular error message format, and implementation details like using time.monotonic() and threading.Lock().

Results

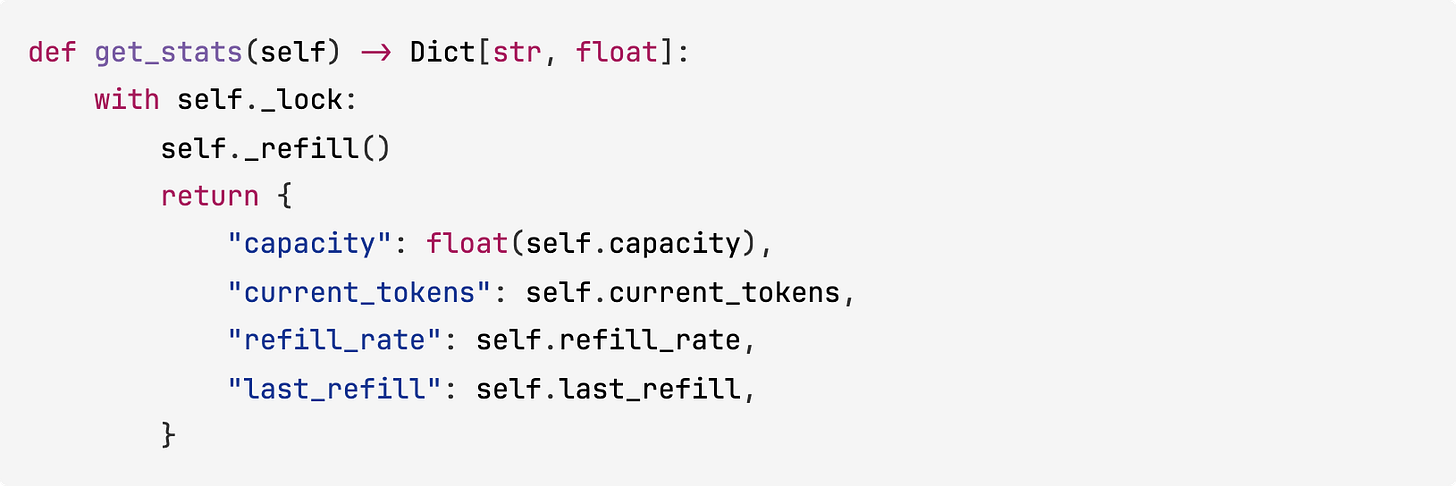

Gemini 3 Flash matched Gemini 3 Pro’s score while completing in half the time at a quarter of the cost. Both Gemini models followed the spec closely and produced the most concise implementations.

Gemini 3 Flash used public attributes (self.capacity, self.current_tokens) instead of private ones (self._capacity, self._current_tokens). This is a stylistic difference that doesn’t affect functionality.
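To make the attribute choice concrete, here is a minimal token bucket sketch in the public-attribute style. It is not the model's actual output: only the class name, the two attribute names, time.monotonic(), and threading.Lock() come from the test description, while `refill_rate`, `last_refill`, and `try_acquire` are hypothetical names we picked for illustration.

```python
import threading
import time


class TokenBucketLimiter:
    """Token bucket using public attributes (the style Gemini 3 Flash chose)."""

    def __init__(self, capacity: int, refill_rate: float) -> None:
        self.capacity = capacity                # a private variant would use self._capacity
        self.current_tokens = float(capacity)   # ...and self._current_tokens
        self.refill_rate = refill_rate          # tokens added per second (assumed parameter)
        self.last_refill = time.monotonic()     # monotonic clock ignores wall-clock changes
        self.lock = threading.Lock()

    def try_acquire(self, tokens: int = 1) -> bool:
        """Hypothetical method: take `tokens` if available, otherwise return False."""
        with self.lock:
            now = time.monotonic()
            elapsed = now - self.last_refill
            refilled = self.current_tokens + elapsed * self.refill_rate
            self.current_tokens = min(self.capacity, refilled)
            self.last_refill = now
            if tokens <= self.current_tokens:
                self.current_tokens -= tokens
                return True
            return False
```

Renaming the attributes with a leading underscore would change nothing about the refill or locking logic, which is why we treated it as style rather than a scoring issue.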

The only point both Gemini models lost was for using Optional[int] instead of the exact int = None signature specified in the requirements. GPT-5.2 Pro was the only model to match that signature exactly, but we tested it only out of curiosity, since it’s not designed as a coding model. We’ve excluded GPT-5.2 Pro from the tables and comparisons in this post.
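The two signature forms look like this side by side. The function and parameter names below are placeholders, since the post does not reproduce the spec's exact method signature.

```python
import inspect
from typing import Optional


def acquire_as_specified(tokens: int = None) -> bool:
    """The form the spec asked for: plain int annotation with a None default."""
    return tokens is None or tokens > 0


def acquire_as_written(tokens: Optional[int] = None) -> bool:
    """The form both Gemini models produced."""
    return tokens is None or tokens > 0


# Same default value and same runtime behavior; only the annotation differs.
spec_param = inspect.signature(acquire_as_specified).parameters["tokens"]
model_param = inspect.signature(acquire_as_written).parameters["tokens"]
print(spec_param.default is model_param.default)        # both defaults are None
print(spec_param.annotation == model_param.annotation)  # the annotations are not equal
```

Optional[int] is arguably the more accurate type hint, but the test graded exact adherence to the written spec, so it cost a point.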

Test 2: TypeScript API Handler Refactoring

We provided a 365-line TypeScript API handler with 20+ SQL injection vulnerabilities, hardcoded secrets, no input validation, and missing features like rate limiting. The task was to refactor into clean layers and implement 10 specific requirements.

Results

Gemini 3 Flash scored 9 points higher than Gemini 3 Pro. The difference came down to two requirements: rate limiting and database transactions.

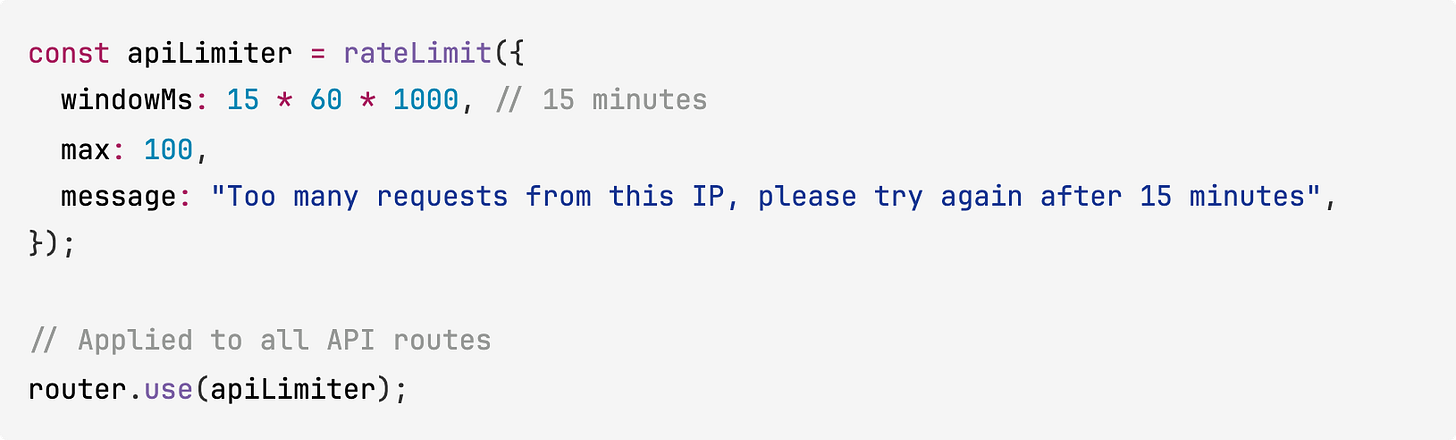

Rate Limiting

Gemini 3 Pro skipped this requirement entirely. Gemini 3 Flash used the express-rate-limit library.

This is simpler than the custom implementations from Claude Opus 4.5 and GPT-5.2, which include rate limit headers and periodic cleanup of expired entries. But Gemini 3 Flash met the core requirement, while Gemini 3 Pro produced nothing at all.
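For a sense of what a custom limiter with headers and cleanup involves, here is a rough Python sketch. It is not the code Claude Opus 4.5 or GPT-5.2 produced (the test project was TypeScript); the class name, method names, fixed-window strategy, and the `X-RateLimit-*` header names are all assumptions chosen for illustration.

```python
import time
from typing import Dict, Optional, Tuple


class FixedWindowLimiter:
    """Per-client fixed-window counter (illustrative, not any model's output)."""

    def __init__(self, limit: int, window_seconds: float) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.windows: Dict[str, Tuple[float, int]] = {}  # client -> (window start, count)

    def check(self, client: str, now: Optional[float] = None) -> Tuple[bool, Dict[str, str]]:
        """Return (allowed, headers) for one request from `client`."""
        now = time.monotonic() if now is None else now
        start, count = self.windows.get(client, (now, 0))
        if now - start >= self.window_seconds:  # window expired, start a fresh one
            start, count = now, 0
        allowed = count < self.limit
        if allowed:
            count += 1
        self.windows[client] = (start, count)
        headers = {
            "X-RateLimit-Limit": str(self.limit),
            "X-RateLimit-Remaining": str(self.limit - count),
        }
        return allowed, headers

    def cleanup(self, now: Optional[float] = None) -> None:
        """Drop expired entries; a server would call this periodically."""
        now = time.monotonic() if now is None else now
        self.windows = {
            client: window
            for client, window in self.windows.items()
            if now - window[0] < self.window_seconds
        }
```

A library hides all of this behind a few options, which is why the library route is shorter but gives less control over headers and memory use.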

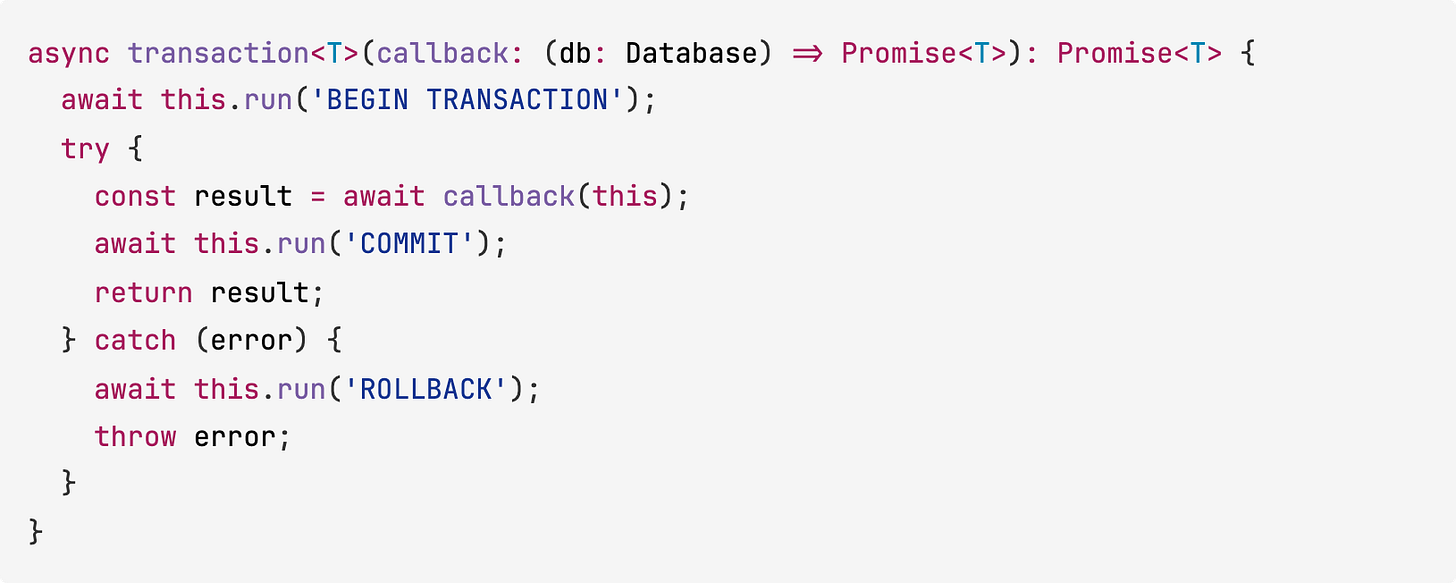

Database Transactions

Gemini 3 Flash implemented proper database transactions with BEGIN, COMMIT, and ROLLBACK.

Gemini 3 Pro left a comment acknowledging transactions were needed but didn’t implement them.
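The BEGIN / COMMIT / ROLLBACK pattern is the same in any SQL client. The sketch below uses Python's built-in sqlite3 module as a stand-in for the TypeScript handler's database layer; the `tasks` table and `create_tasks` function are hypothetical, not taken from either model's output.

```python
import sqlite3

# isolation_level=None turns off sqlite3's implicit transaction handling,
# so the BEGIN / COMMIT / ROLLBACK statements below are the only ones issued.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT NOT NULL)")


def create_tasks(titles):
    """Insert every title or none of them."""
    conn.execute("BEGIN")
    try:
        for title in titles:
            # Parameterized query: values are never spliced into the SQL string.
            conn.execute("INSERT INTO tasks (title) VALUES (?)", (title,))
        conn.execute("COMMIT")
    except sqlite3.Error:
        conn.execute("ROLLBACK")
        raise


create_tasks(["write tests", "ship release"])
try:
    create_tasks(["valid title", None])  # None violates NOT NULL, so the batch rolls back
except sqlite3.IntegrityError:
    pass

task_count = conn.execute("SELECT COUNT(*) FROM tasks").fetchone()[0]
print(task_count)  # prints 2: the failed batch left no partial rows behind
```

Without the ROLLBACK branch, the "valid title" row from the failed batch would stay pending in an open transaction, which is the kind of partial write the requirement was meant to prevent.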

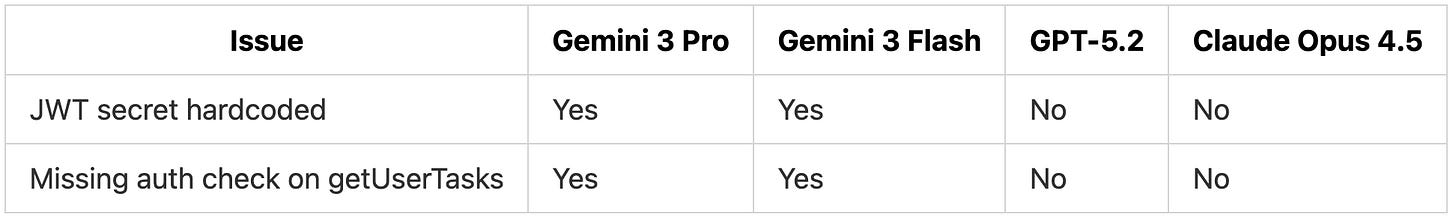

Shared Weaknesses

Both Gemini models share the same security gaps:

Both hardcode the JWT secret as 'hardcoded-secret-key-123' instead of using environment variables. Both allow any authenticated user to view any user’s tasks without authorization checks. GPT-5.2 and Claude Opus 4.5 fixed both issues.
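Both gaps have small fixes. The following is a sketch of the general idea in Python rather than the TypeScript that GPT-5.2 or Claude Opus 4.5 wrote; the `JWT_SECRET` variable name, the function names, and the admin override are assumptions for illustration.

```python
import os


def load_jwt_secret() -> str:
    """Read the signing secret from the environment instead of hardcoding it."""
    secret = os.environ.get("JWT_SECRET")  # variable name is an assumption
    if not secret:
        # Fail at startup rather than silently signing tokens with a default.
        raise RuntimeError("JWT_SECRET is not set")
    return secret


def can_view_tasks(requester_id: int, owner_id: int, is_admin: bool = False) -> bool:
    """Let users read only their own tasks; the admin override is an assumed policy."""
    return is_admin or requester_id == owner_id
```

A route handler would call `can_view_tasks` with the authenticated user's ID and the ID from the URL, and return a 403 when it is False.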

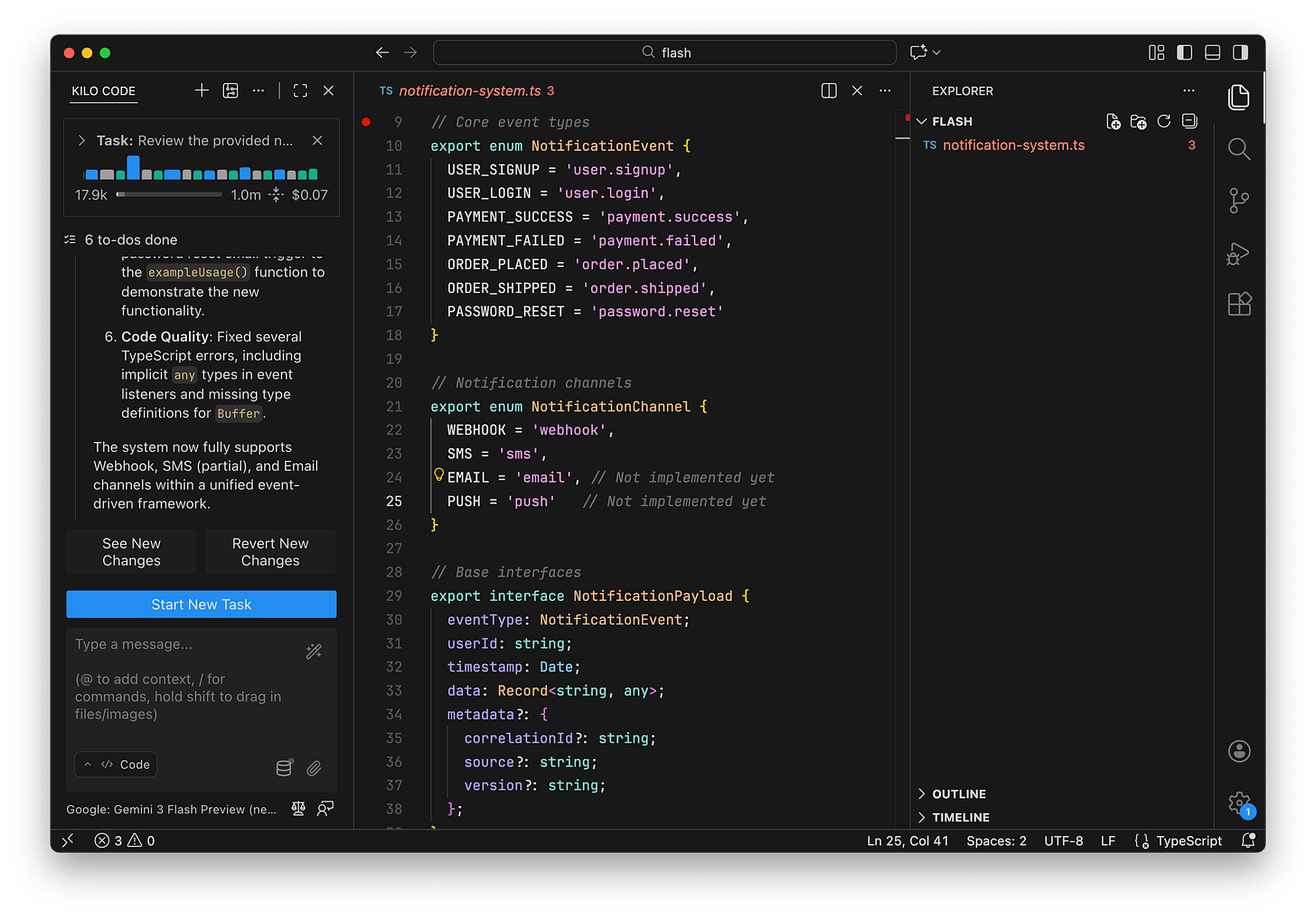

Test 3: Notification System Extension

We provided a 400-line notification system with Webhook and SMS handlers and asked models to explain the architecture using Ask Mode, then add an EmailHandler that matches existing patterns using Code Mode.

Results

Gemini 3 Flash scored 7 points higher than Gemini 3 Pro. Gemini 3 Flash produced a more detailed architecture explanation (71 lines vs 51 lines) and included a Mermaid flowchart diagram that Gemini 3 Pro lacked.

Gemini 3 Flash’s Better Understanding

Gemini 3 Flash identified the same architectural issues as more expensive models, including the fragile channel detection using type casting, private property access violations, missing validation during handler registration, and queue bottlenecks.

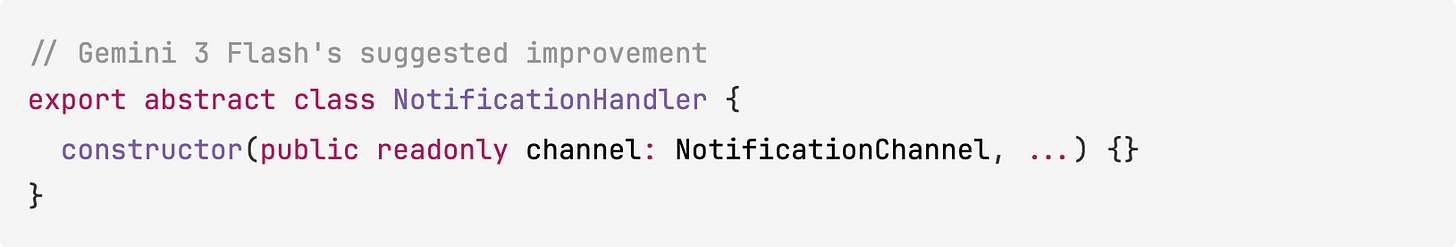

Gemini 3 Flash also suggested concrete fixes for these issues.

Gemini 3 Pro identified fewer issues and provided no code suggestions.

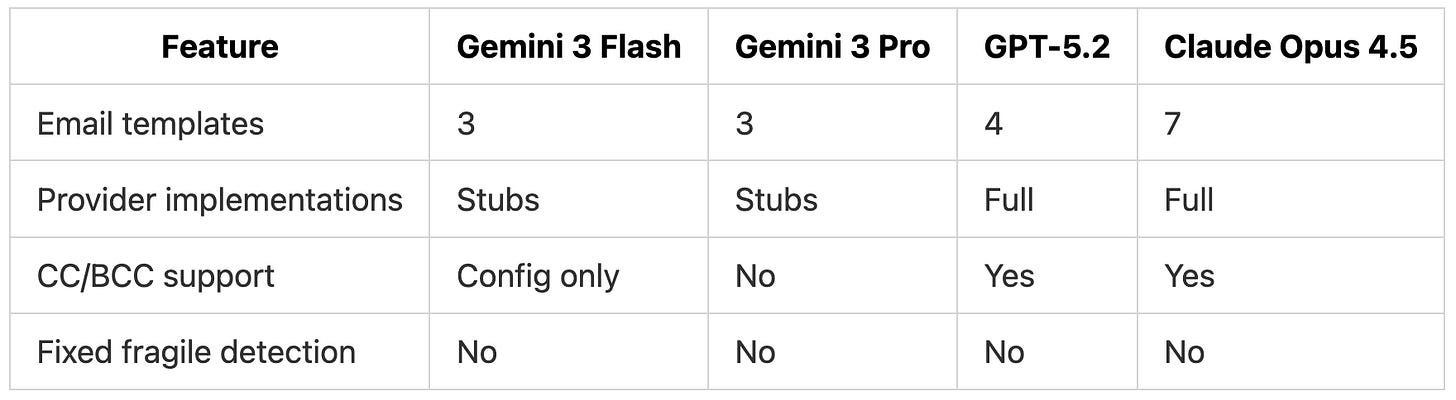

Implementation Gap

Both Gemini models trail behind GPT-5.2 and Claude Opus 4.5 on implementation quality:

Gemini 3 Flash defined CC/BCC in its EmailConfig interface but didn’t use them in the send implementation. Both Gemini models left provider implementations as console.log placeholders rather than actual API calls to SMTP, SendGrid, or AWS SES.
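Wiring CC and BCC through to the send step is a small amount of code. Here is a sketch using Python's standard email library in place of the TypeScript handler; the `EmailConfig` field names and the `build_email` function are our assumptions, and the actual provider call (SMTP, SendGrid, or AWS SES) is left out.

```python
from dataclasses import dataclass, field
from email.message import EmailMessage
from typing import List, Tuple


@dataclass
class EmailConfig:
    sender: str
    to: List[str]
    cc: List[str] = field(default_factory=list)
    bcc: List[str] = field(default_factory=list)


def build_email(config: EmailConfig, subject: str, body: str) -> Tuple[EmailMessage, List[str]]:
    """Return the message plus the full list of envelope recipients."""
    message = EmailMessage()
    message["From"] = config.sender
    message["To"] = ", ".join(config.to)
    if config.cc:
        message["Cc"] = ", ".join(config.cc)  # CC is visible in the headers
    message["Subject"] = subject
    message.set_content(body)
    # BCC addresses receive the mail but are deliberately left out of the headers.
    recipients = config.to + config.cc + config.bcc
    return message, recipients
```

The returned recipient list is what a real provider call would deliver to, so CC and BCC addresses are used rather than just declared.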

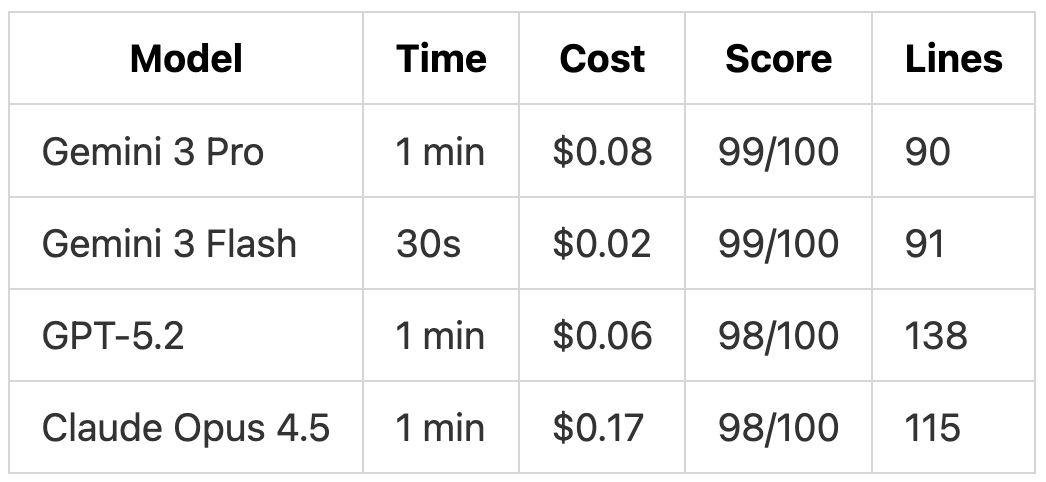

Performance Summary

Overall Scores

Gemini 3 Flash vs Gemini 3 Pro

The budget model matched or outperformed the flagship on every test: it tied on Test 1 and scored higher on Tests 2 and 3. Gemini 3 Flash’s advantage came from implementing requirements that Gemini 3 Pro missed (rate limiting, transactions) rather than from architectural improvements.

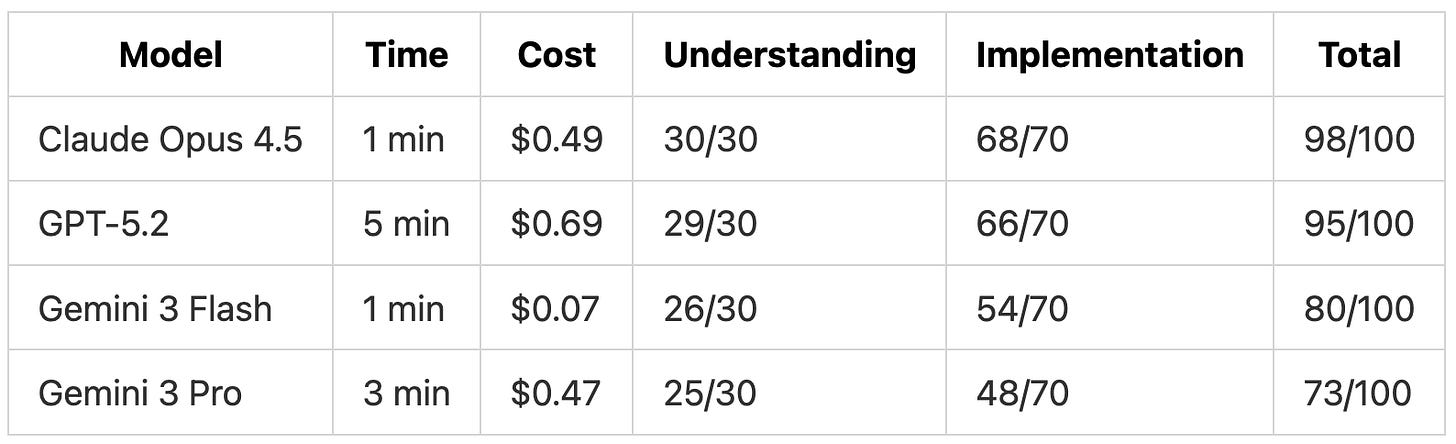

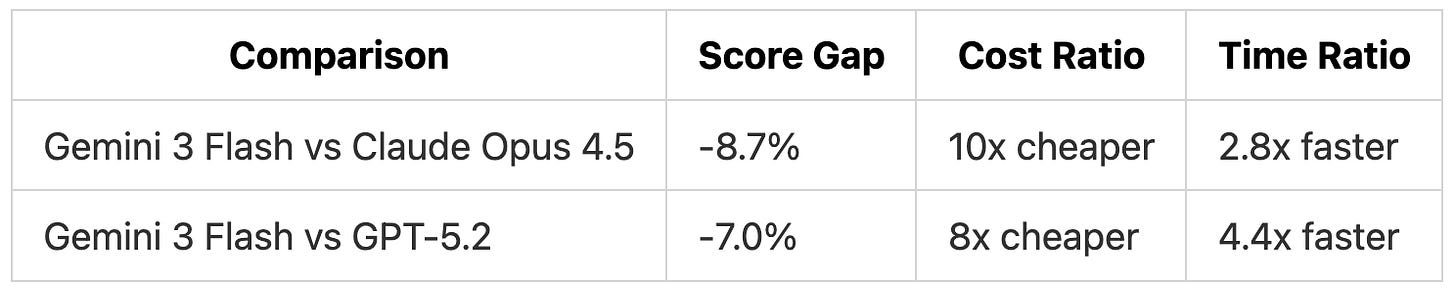

Gemini 3 Flash vs Frontier Models

Gemini 3 Flash trails the leading models, GPT-5.2 and Claude Opus 4.5, by 7-9 points.

For $0.17 and 2.5 minutes, Gemini 3 Flash delivers 90% of the functionality. The 7-9 point gap to the frontier models covers actual API implementations for email providers instead of stubs, complete email features like CC/BCC in the send logic and all 7 event templates, environment variable handling for secrets, and authorization checks on user-scoped endpoints.

Gemini 3 Flash as an Implementation Model

We’ve been exploring workflows where you plan with one model and implement with another. The idea is to use a more capable model for architecture and design decisions, then switch to a faster model for writing the actual code. Gemini 3 Flash fits this pattern well.

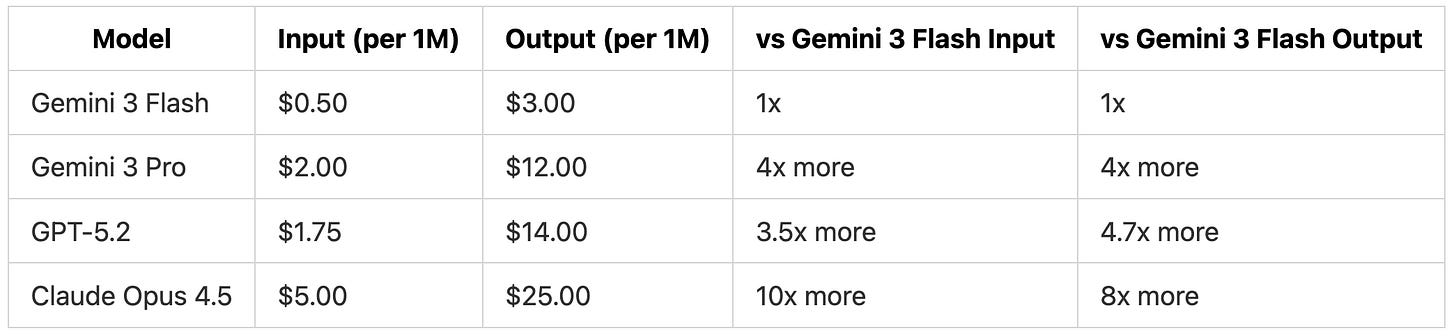

The Speed and Cost Advantage

Here’s how Gemini 3 Flash compares on pricing:

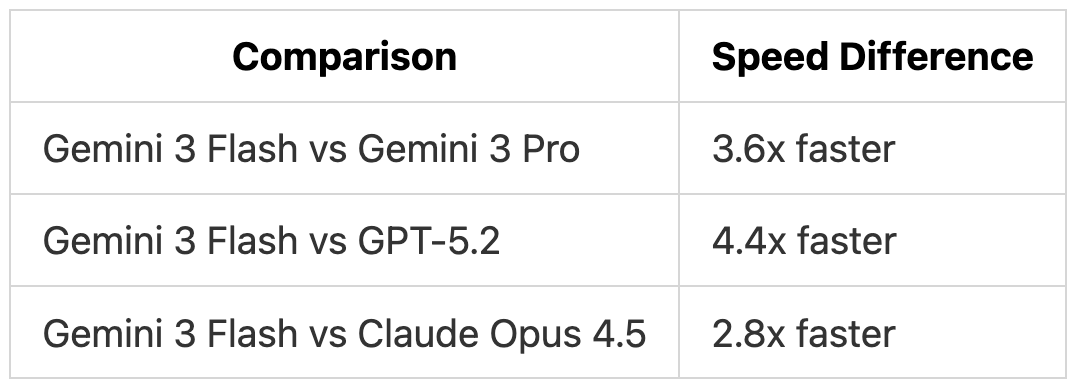

In our tests, Gemini 3 Flash was also faster in practice: it finished all three tests in 2.5 minutes, versus 9 minutes for Gemini 3 Pro.

Why This Matters for Plan-Then-Implement

Gemini 3 Flash scored 90% on our tests, which is 7-9 points behind the frontier models. But those missing points were mostly in areas that planning handles: security considerations like environment variables, authorization checks, and complete feature coverage.

If you use Claude Opus 4.5 or GPT-5.2 to plan the architecture and specify what needs to be built, Gemini 3 Flash can execute that plan quickly and cheaply. The planning model catches the security concerns and design decisions. Gemini 3 Flash handles the implementation.

This workflow gives you the thoroughness of expensive models where it matters (design) and the speed of Gemini 3 Flash where volume matters (writing code).

Verdict

Gemini 3 Flash is the fastest and cheapest model we’ve tested on this benchmark, completing all three tests in 2.5 minutes for $0.17 total. It scored 90% on average compared to Gemini 3 Pro’s 84.7%, which is unexpected for a budget model. The difference came from Gemini 3 Flash implementing requirements that Pro missed (rate limiting and database transactions) and from its more detailed documentation with Mermaid diagrams.

Gemini 3 Flash matched or beat Gemini 3 Pro on all three challenges in our tests, though other benchmarks or task types may show different results. For developers choosing between Gemini models, Gemini 3 Flash is worth testing first given the 6x cost savings and 3.6x speed advantage.

For complete implementations that handle security concerns like environment variables and authorization checks, GPT-5.2 and Claude Opus 4.5 remain the better choices on our benchmark. They scored 7-9 points higher and implemented features fully rather than leaving stubs. Alternatively, you can use one of those models in Architect Mode for planning, then switch to Code Mode with Gemini 3 Flash for implementation.

Current Standings

Gemini 3 Flash carves out a new position as the budget option that doesn’t sacrifice quality for cost. At $0.17 per run, you could run Gemini 3 Flash 10 times for the cost of one Claude Opus 4.5 run. For iterative development where you’ll refine the output anyway, that math works in Gemini 3 Flash’s favor.

Testing performed using Kilo Code, a free open-source AI coding assistant for VS Code and JetBrains with 750,000+ installs.
