Benchmarking GPT-5.1 vs Gemini 3.0 vs Opus 4.5 across 3 Coding Tasks

Three AI giants released their best coding models in the same month:

November 12: OpenAI released GPT-5.1 and GPT-5.1-Codex-Max simultaneously

November 18: Google released Gemini 3.0, a significant upgrade from Gemini 2.5

November 24: Anthropic released Opus 4.5

The question on everyone’s mind: Which is the best AI model for practical coding? We ran some benchmarks and experiments to find out.

Update: We hosted a webinar with Anthropic’s Applied AI team about Opus 4.5. Watch the recording.

Testing Methodology

We designed 3 tests to cover different coding challenges with LLMs:

Prompt Adherence Test: We asked for a Python rate limiter with 10 specific requirements (e.g., exact class names and error messages) to see whether the model follows instructions strictly or treats them as loose guidelines.

Code Refactoring Test: We provided a messy legacy API with security holes and bad practices. We wanted to see whether the model would spot the issues and fix the architecture, and whether it would add safeguards and validation that we didn’t ask for explicitly.

System Extension Test: We gave each model a partial notification system and asked it to explain the architecture first, then add an email handler. This tested how well the model understood the system before extending it, and whether it would mirror the existing architecture while adding a rich set of features.

Each test started from an empty project in Kilo Code:

For the first two tests, we used Kilo Code in Code Mode

For the third test, we used Ask Mode to analyze the existing code and then switched to Code Mode to write the missing email handler

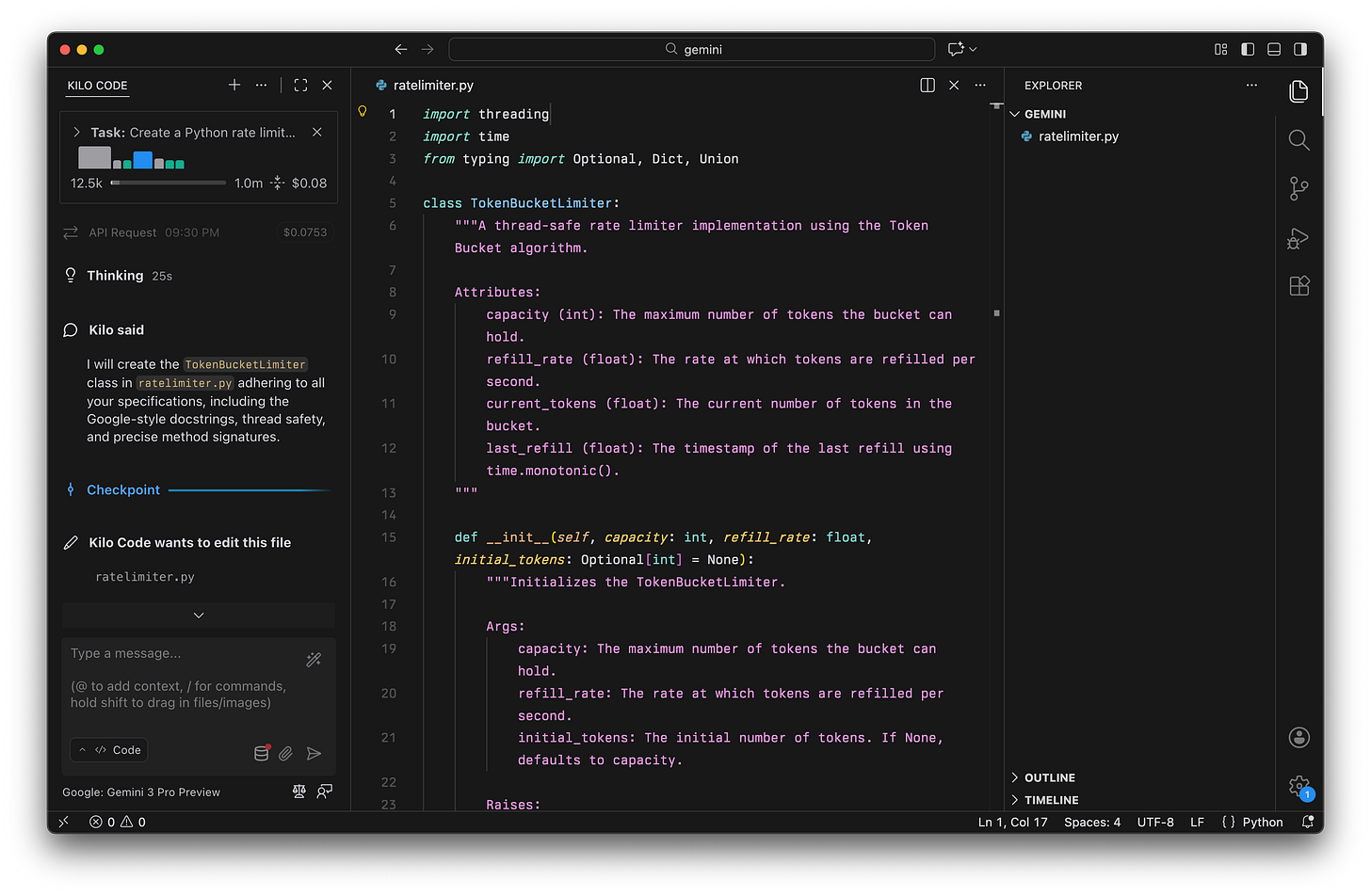

Test 1: Python Rate Limiter

We gave all three models a prompt with 10 specific rules for a Python rate limiter class.

The rules were intentionally rigid to test how well the models handle strict constraints. They covered the exact class name (TokenBucketLimiter), specific method signatures (try_consume returning a tuple), error messages that had to match a specific format, and implementation details like using time.monotonic() and threading.Lock().

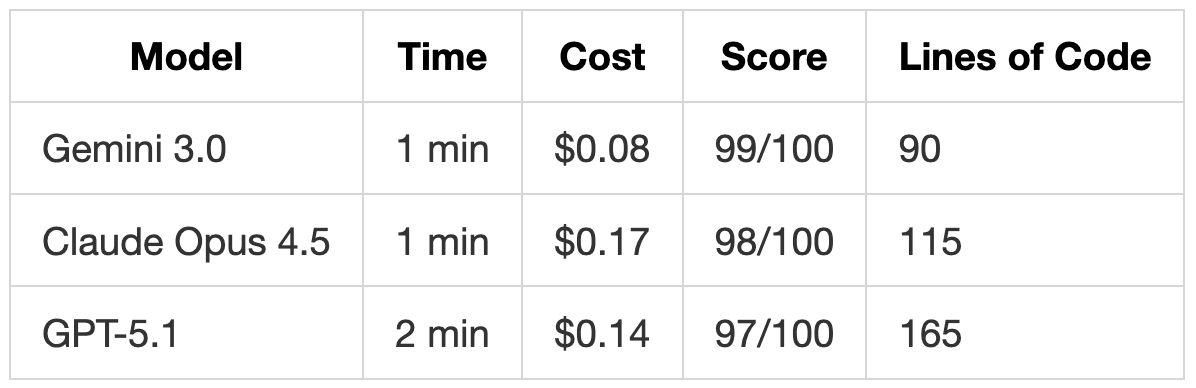
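As a rough illustration of the constraints named above, here is a minimal sketch of such a class. It is not any model's output, and the full 10-rule prompt is not reproduced in this article. The `capacity` parameter, the `(allowed, seconds_to_wait)` layout of the returned tuple, and the omission of the required error messages are all assumptions.

```python
import threading
import time
from typing import Optional, Tuple


class TokenBucketLimiter:
    """Token bucket sketch; parameter names and return shape are assumptions."""

    def __init__(self, capacity: float, refill_rate: float,
                 initial_tokens: Optional[float] = None):
        # Assumes refill_rate > 0 (tokens added per second); no validation here.
        self.capacity = capacity
        self.refill_rate = refill_rate
        self._current_tokens = capacity if initial_tokens is None else initial_tokens
        # A monotonic clock never goes backwards when the wall clock is adjusted.
        self._last_refill = time.monotonic()
        self._lock = threading.Lock()

    def _refill(self) -> None:
        # Caller must hold the lock.
        now = time.monotonic()
        elapsed = now - self._last_refill
        self._current_tokens = min(
            self.capacity, self._current_tokens + elapsed * self.refill_rate
        )
        self._last_refill = now

    def try_consume(self, tokens: float = 1) -> Tuple[bool, float]:
        """Return (allowed, seconds_to_wait); this tuple layout is hypothetical."""
        with self._lock:
            self._refill()
            if self._current_tokens >= tokens:
                self._current_tokens -= tokens
                return True, 0.0
            deficit = tokens - self._current_tokens
            return False, deficit / self.refill_rate
```

The lock makes the refill-then-consume sequence atomic, so two threads cannot both spend the same token.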

Results

Gemini 3.0 delivered exactly what we asked for with simple, clean code. It implemented the logic without adding any extra validation or features that weren’t in the spec:

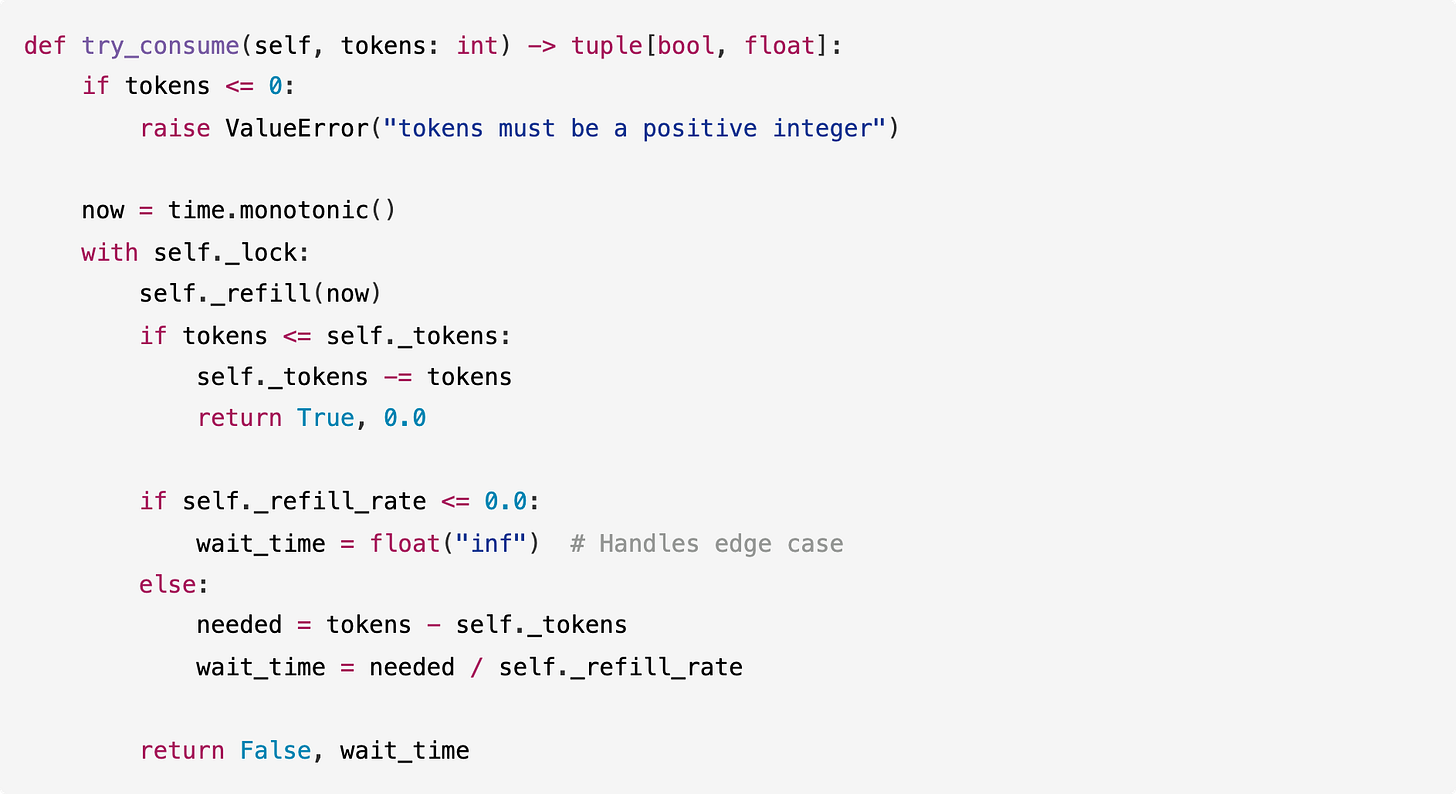

GPT-5.1, on the other hand, added several features we didn’t ask for. It added input validation to the method to ensure tokens was positive, even though the requirements didn’t specify this behavior.

GPT-5.1 also added unrequested checks in the constructor to validate refill_rate and initial_tokens.
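To make the effect of such a guard concrete, here is a hedged sketch. It is not GPT-5.1's actual code; the function names and the error message are hypothetical.

```python
def validate_tokens(tokens: float) -> None:
    """Hypothetical guard of the kind described above; the message is invented."""
    if tokens <= 0:
        raise ValueError(f"tokens must be positive, got {tokens}")


def consume_strict(available: float, tokens: float) -> bool:
    """With the guard: non-positive requests raise instead of returning a result."""
    validate_tokens(tokens)
    return available >= tokens


def consume_literal(available: float, tokens: float) -> bool:
    """Without the guard: the spec-literal version accepts any number."""
    return available >= tokens
```

A caller that passes `0` gets `True` from the literal version and an exception from the strict one, which is why an unrequested check can count as a behavior change.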

Claude Opus 4.5 landed in the middle. It wrote clean code without the unrequested validation that GPT-5.1 added, but its implementation was slightly more verbose than Gemini’s.

Opus 4.5 followed the requirements without over-engineering, but included more detailed docstrings than Gemini. It lost one point because it named its internal variable _tokens instead of _current_tokens (a minor naming inconsistency with the required dict key).

Each model showed a distinct approach:

Gemini 3.0 followed the instructions literally and scored highest.

Opus 4.5 stayed close to the spec with cleaner documentation.

GPT-5.1 took a defensive approach, adding safeguards and validation that weren’t explicitly requested.

Depending on your goal, GPT-5.1’s extra helpfulness can be a major asset (preventing bugs you missed) or a slight annoyance (if you need a specific, minimal implementation).

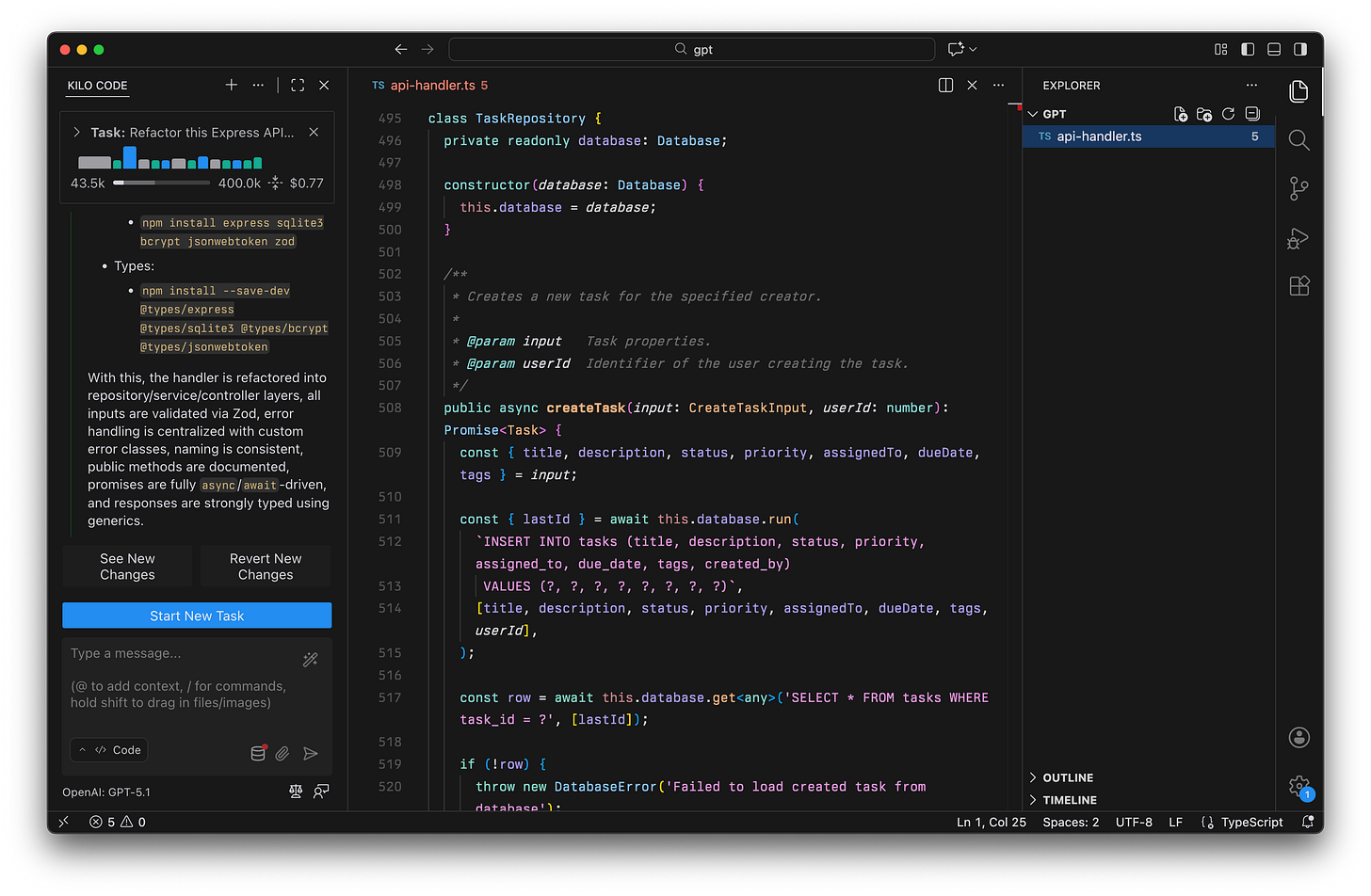

Test 2: TypeScript API Handler

We provided a 365-line TypeScript API handler with serious issues. We tasked the models with a full refactor of this legacy codebase, which contained:

20+ SQL injection vulnerabilities

Mixed naming conventions (Username vs user_id)

No input validation

Too many ‘any’ types

Mixed async patterns

No database transactions

Secrets stored in plain text

The task was to split the code into layers (Service/Controller/Repository), add Zod validation, fix security vulnerabilities, and clean up the structure. This tested the models’ ability to handle large-scale refactoring and architectural decisions.
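The legacy handler itself is not shown here, and the test was in TypeScript. Purely to illustrate what fixing a SQL injection means, here is a small Python sketch using the standard `sqlite3` module; the table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (user_id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])
conn.commit()


def find_user_unsafe(username: str):
    # Vulnerable: user input is spliced directly into the SQL text.
    query = f"SELECT user_id FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(username: str):
    # Fixed: the driver binds the value, so it is never parsed as SQL.
    query = "SELECT user_id FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


# Classic injection payload: turns the WHERE clause into a tautology.
payload = "' OR '1'='1"
```

With this payload the unsafe version matches every row, while the parameterized version matches none.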

Results

Claude Opus 4.5 was the only model to score 100/100, primarily because it was the only one to implement rate limiting, which was one of our 10 requirements.

Key Differences

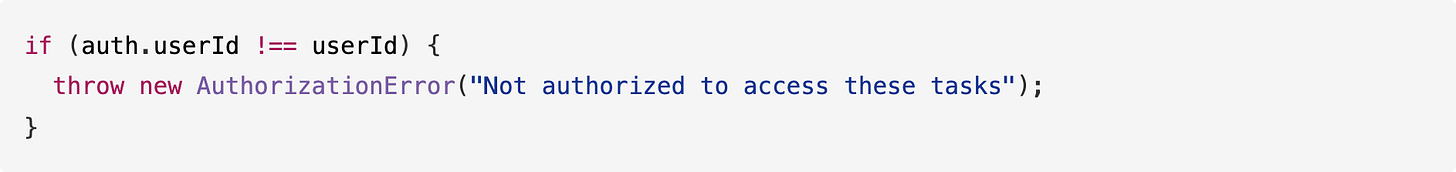

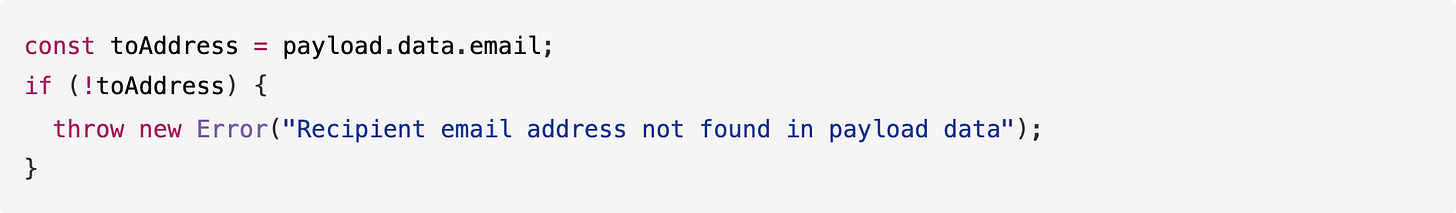

Authorization Checks:

GPT-5.1 acted defensively. It noticed that the getUserTasks endpoint was returning tasks without checking whether the requesting user actually owned them, and it added a check to prevent data leaks.

Gemini 3.0 missed this, leaving a security hole where any logged-in user could see anyone’s tasks.
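The idea behind an ownership check can be sketched in a few lines. This is an illustration in Python rather than the TypeScript either model wrote; the data shape, the function signature, and the `ForbiddenError` class are assumptions.

```python
class ForbiddenError(Exception):
    """Hypothetical error type for failed ownership checks."""


TASKS = [
    {"id": 1, "owner_id": 10, "title": "write report"},
    {"id": 2, "owner_id": 20, "title": "review PR"},
]


def get_user_tasks(requesting_user_id: int, target_user_id: int) -> list:
    # Reject the request unless the caller is asking for their own tasks.
    if requesting_user_id != target_user_id:
        raise ForbiddenError("cannot read another user's tasks")
    return [task for task in TASKS if task["owner_id"] == target_user_id]
```

Without the first `if`, being logged in would be enough to read any user's tasks, which is the hole described above.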

Database Transactions:

GPT-5.1 recognized that some operations (like archiving tasks) involved multiple database steps. It implemented proper transactions to prevent data corruption if one step failed.

Gemini 3.0 correctly identified that transactions were needed, but didn’t actually implement them, leaving only a comment in their place.
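Why a multi-step operation such as archiving needs a transaction can be shown with a small Python sketch using `sqlite3`. This is not the code either model produced; the schema and the `fail_midway` switch are hypothetical and exist only to simulate a failure between the two steps.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE tasks (id INTEGER PRIMARY KEY, title TEXT)")
conn.execute("CREATE TABLE archived_tasks (id INTEGER PRIMARY KEY, title TEXT)")
conn.execute("INSERT INTO tasks (id, title) VALUES (1, 'old task')")
conn.commit()


def archive_task(task_id: int, fail_midway: bool = False) -> None:
    # "with conn" commits if the block succeeds and rolls back if it raises.
    with conn:
        conn.execute(
            "INSERT INTO archived_tasks SELECT id, title FROM tasks WHERE id = ?",
            (task_id,),
        )
        if fail_midway:
            raise RuntimeError("simulated failure between the two steps")
        conn.execute("DELETE FROM tasks WHERE id = ?", (task_id,))


def count(table: str) -> int:
    # Table name comes from trusted code here, never from user input.
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]
```

If the second step fails, the rollback undoes the copy, so the task never exists in both tables or in neither.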

Backward Compatibility:

GPT-5.1 showed an understanding of legacy systems. When validating inputs, it supported both the old field names (like Title) and the new ones (like title) to avoid breaking existing clients.

Gemini 3.0 only supported the new names, which would break any app still sending the old format.
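One way to accept both spellings is to normalize input before validating it. The Python sketch below illustrates the idea and is not GPT-5.1's implementation. Only the `Title`/`title` pair comes from the test; the `Description` entry and the rule that new names win on conflict are assumptions.

```python
# Old name -> new name. "Description" is a hypothetical second entry.
LEGACY_FIELD_NAMES = {"Title": "title", "Description": "description"}


def normalize_task_input(raw: dict) -> dict:
    """Accept old capitalized keys and new lowercase keys; new keys win on conflict."""
    normalized = {}
    # First pass: map legacy names onto their replacements.
    for key, value in raw.items():
        if key in LEGACY_FIELD_NAMES:
            normalized.setdefault(LEGACY_FIELD_NAMES[key], value)
    # Second pass: current names overwrite anything taken from a legacy key.
    for key, value in raw.items():
        if key not in LEGACY_FIELD_NAMES:
            normalized[key] = value
    return normalized
```

Validation can then run against a single schema that only knows the new names.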

Rate Limiting (Claude Opus 4.5 Only):

The prompt explicitly asked for rate limiting, and Opus 4.5 was the only model to implement it.

Opus 4.5 also included proper rate limit headers and a RateLimitError class. GPT-5.1 and Gemini 3.0 both ignored this requirement entirely.
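For readers unfamiliar with the pattern, here is a hedged Python sketch of a per-client limiter that reports its state through headers and raises a dedicated error. It is not Opus 4.5's code. The fixed-window algorithm, the `X-RateLimit-*` header names (a common convention, not a standard), and the `clock` parameter are assumptions.

```python
import math
import time


class RateLimitError(Exception):
    """Raised when a client exceeds its quota; carries a Retry-After value."""

    def __init__(self, retry_after: int):
        super().__init__(f"rate limit exceeded, retry in {retry_after}s")
        self.retry_after = retry_after


class FixedWindowLimiter:
    """Single-threaded sketch; a real server would also need locking."""

    def __init__(self, limit: int, window_seconds: int, clock=time.monotonic):
        self.limit = limit
        self.window_seconds = window_seconds
        self._clock = clock  # injectable so tests can control time
        self._windows = {}  # client_id -> (window_start, request_count)

    def check(self, client_id: str) -> dict:
        now = self._clock()
        start, count = self._windows.get(client_id, (now, 0))
        if now - start >= self.window_seconds:
            start, count = now, 0  # window expired, start a new one
        if count >= self.limit:
            raise RateLimitError(math.ceil(self.window_seconds - (now - start)))
        self._windows[client_id] = (start, count + 1)
        return {
            "X-RateLimit-Limit": str(self.limit),
            "X-RateLimit-Remaining": str(self.limit - count - 1),
        }
```

A request handler would attach the returned headers to a successful response and translate `RateLimitError` into an HTTP 429 with a `Retry-After` header.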

Environment Variables (Claude Opus 4.5 Only):

Both GPT-5.1 and Gemini 3.0 hardcoded the JWT secret, but Opus 4.5 used environment variables.
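The difference amounts to where the secret lives. A minimal Python sketch of the environment-variable approach follows; the variable name `JWT_SECRET` and the fail-fast behavior are assumptions, not details from Opus 4.5's output.

```python
import os


def load_jwt_secret(env=os.environ) -> str:
    """Read the signing secret from the environment; JWT_SECRET is an assumed name."""
    secret = env.get("JWT_SECRET")
    if not secret:
        # Fail fast rather than fall back to a hardcoded default.
        raise RuntimeError("JWT_SECRET is not set")
    return secret
```

Refusing to start without the variable avoids the quieter failure mode where a placeholder secret ships to production.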

Summary:

Claude Opus 4.5 delivered the most complete refactoring by implementing all 10 requirements.

GPT-5.1 handled 9/10 rules thoroughly, catching security holes like missing authorization and unsafe database operations.

Gemini 3.0 handled 8/10 with some partial fixes: while its code was cleaner and faster to generate, it missed deeper architectural flaws.

Test 3: Notification System Understanding and Extension

We provided a notification system (400 lines) with Webhook and SMS support, and asked the models to:

Explain how the current architecture works (using Ask Mode)

Add a new EmailHandler that fits the existing pattern (using Code Mode)

This tested two things: how well the model understands existing code, and if it can write new code that matches the project’s style.

Results

Claude Opus 4.5 was the fastest at 1 minute while producing the most complete implementation (936 lines with templates for all 7 notification events). Gemini 3.0 cost more than GPT-5.1 despite being faster, because it consumed more tokens during the reasoning phase.

Understanding the Code

GPT-5.1 delivered a detailed architectural audit. It analyzed the existing codebase and produced a 306-line report that:

Visualized the Flow: Included a Mermaid sequence diagram showing exactly how events propagate through the system.

Cited Evidence: Referenced specific line numbers for every claim (e.g., “lines 256-259”).

Found Hidden Bugs: Identified subtle issues like the hardcoded channel detection logic (which would break when adding new handlers) and the missing retry implementation, neither of which was obvious at first glance.

Gemini 3.0 provided a concise, high-level summary (51 lines). It correctly identified the core design patterns (Strategy, Observer) and the missing components but didn’t dig into the implementation details or point out potential bugs in the existing code.

Opus 4.5 struck a balance with a 235-line analysis that included both Mermaid diagrams and actionable code suggestions. It identified all the same patterns as GPT-5.1, and also suggested specific fixes like adding an abstract channel getter to the base class to eliminate the fragile type casting in registerHandler.

Adding Email Support

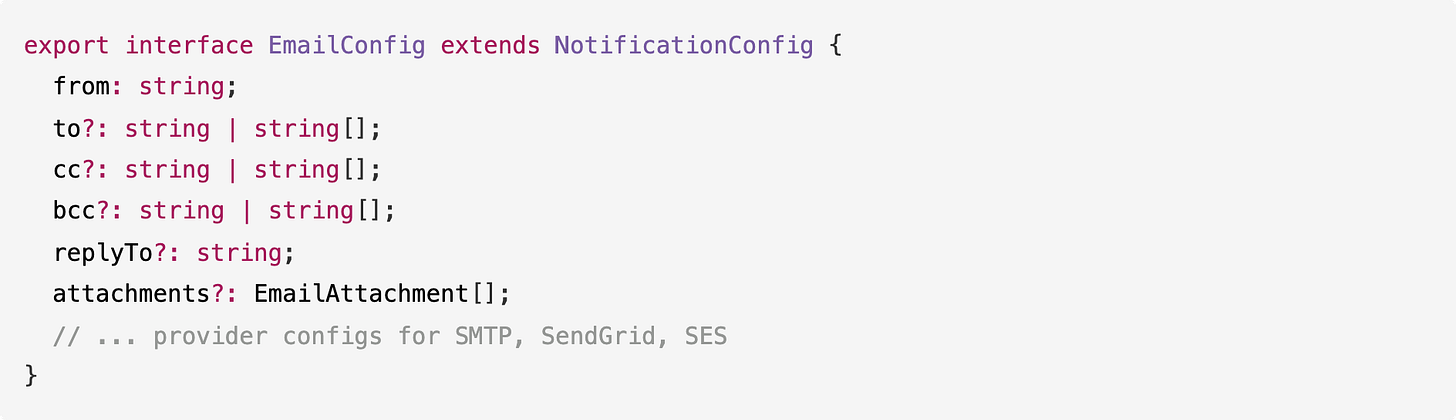

GPT-5.1 added full-featured email support that matched the existing system perfectly. It defined a full configuration interface.

It also added logic to handle multiple recipients (TO, CC, BCC) intelligently:

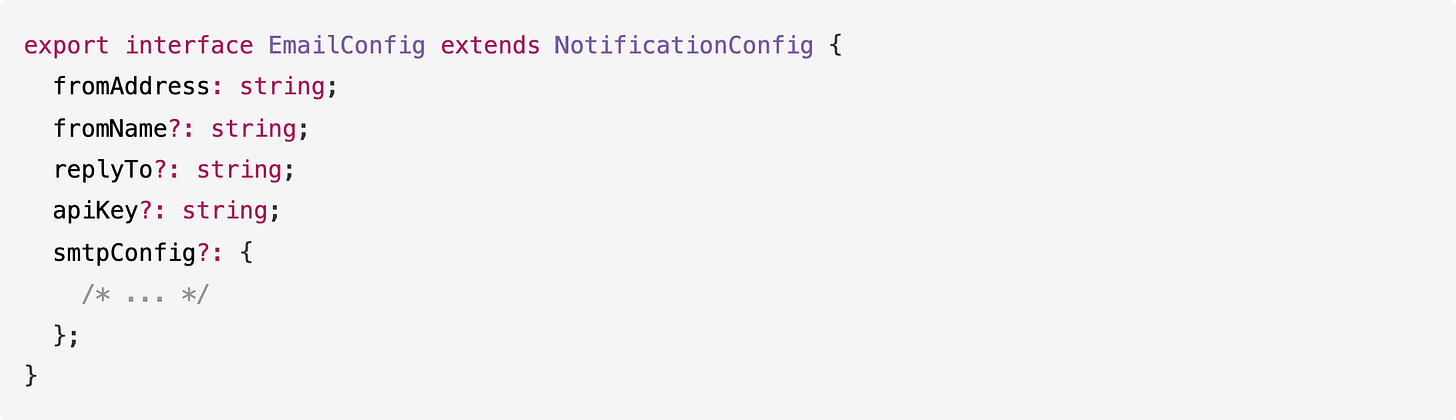

Gemini 3.0 implemented a simpler version. It added the basic fields needed to send an email but skipped features like attachments or CC/BCC arrays.

It handled recipient resolution by just grabbing the email from the payload data, assuming it would always be there.
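The risk in that assumption is easiest to see side by side. The Python sketch below is illustrative only (the test code was TypeScript); the payload shape `{"data": {"email": ...}}` and the error message are hypothetical.

```python
def resolve_recipient_naive(payload: dict) -> str:
    # Assumes the address is always present; raises KeyError when it is not.
    return payload["data"]["email"]


def resolve_recipient_checked(payload: dict) -> str:
    # Tolerates a missing or empty "data" section and rejects unusable values.
    email = (payload.get("data") or {}).get("email")
    if not isinstance(email, str) or "@" not in email:
        raise ValueError("notification payload has no usable email address")
    return email
```

Both return the same address on well-formed input; they differ only in how clearly they fail when the field is absent.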

Opus 4.5 delivered the most thorough implementation. It added templates for all 7 notification events (USER_SIGNUP, USER_LOGIN, PASSWORD_RESET, PAYMENT_SUCCESS, PAYMENT_FAILED, ORDER_PLACED, ORDER_SHIPPED), while GPT-5.1 and Gemini only covered 3 events each.

Opus 4.5 also added runtime template management methods that the other models didn’t include.

And it supported fromName for proper email display names.

Note: All three models saw a design flaw (accessing a private variable) but chose to follow the existing pattern rather than break it.

Summary:

Claude Opus 4.5 delivered the most complete solution with templates for every event type and runtime customization.

GPT-5.1 went deep in the understanding phase by identifying specific bugs and creating diagrams, then mirrored the existing architecture with a rich feature set (CC/BCC, attachments).

Gemini 3.0 understood the basics but implemented a “bare minimum” version that worked but lacked the polish and flexibility of the other solutions.

Performance Summary

Overall Metrics

Lines of Code Comparison

Opus 4.5 was the fastest overall (7 minutes total) while producing the most thorough output. It wrote the most code in Tests 2 and 3, where thoroughness mattered most, but stayed concise in Test 1 where the spec was rigid.

GPT-5.1 consistently wrote more code than Gemini (1.5x to 1.8x more) because it added:

JSDoc comments for most functions

Validation logic for function arguments

Error handling for edge cases

Explicit type definitions instead of inferred ones

Gemini 3.0 is the cheapest overall, but it cost more than GPT-5.1 in Test 3 because it engaged in a longer internal reasoning chain before outputting the code. This suggests that for complex system understanding tasks, Gemini might “think” longer even if its final output is shorter.

Opus 4.5 is the most expensive but delivered the highest scores. The cost difference ($1.68 vs $1.10 for Gemini) might be worth it if you need complete implementations on the first try.

Code Style Comparison

GPT-5.1

GPT-5.1 defaults to a verbose style. It includes JSDoc comments, explicitly types the parameters (using unknown[] instead of any[]), and wraps the logic in a typed Promise.

Gemini 3.0

Gemini 3.0 defaults to a minimal style. It writes the shortest working implementation, skips the comments, and uses looser types (any[]).

Gemini achieved the same result with half the lines of code, but omitted the documentation and type safety features that GPT-5.1 included.

Claude Opus 4.5

Claude Opus 4.5 produces well-organized code. It uses strict types like GPT-5.1 but groups the code under clearly labeled section headers.

Opus 4.5 wraps errors in custom error classes (like DatabaseError) and uses generic type parameters. Its code is more verbose than Gemini but less so than GPT-5.1, landing in a middle ground that prioritizes organization and completeness.

Prompt Adherence vs. Helpfulness

In Test 1, Gemini 3.0 scored highest (99/100) by doing exactly what was asked. Opus 4.5 scored 98/100 with a clean implementation that included better documentation. GPT-5.1 scored 97/100 because it added extra features:

Validation checks that changed method behavior

Handling for edge cases we didn’t specify

Logic that extended beyond the requirements

In Tests 2 and 3, the pattern reversed. Claude Opus 4.5 scored highest by implementing all requirements (including rate limiting that both others missed) and adding extra features (templates for all events, runtime configuration). GPT-5.1 scored second by adding defensive features. Gemini 3.0 scored lowest by sticking to the minimum interpretation of each requirement.

The takeaway: for strict specs, Gemini follows the prompt literally. For complex tasks where completeness matters, Claude Opus 4.5 delivers the most thorough implementation.

Practical Tips

Reviewing Claude Opus 4.5 Code

When reviewing code from Claude Opus 4.5, look for:

Extra Features: Did it add features you might not need (like runtime template management)? These are usually useful but add complexity.

Organization Overhead: The numbered section headers and extensive error classes are helpful for large projects but might be overkill for small scripts.

Best Practices: It tends to use environment variables and proper error hierarchies, which you may need to configure.

Reviewing GPT-5.1 Code

When reviewing code from GPT-5.1, look for:

Over-engineering: Did it add validation logic that you don’t actually need?

Contract Changes: Did it add constraints (like “positive integers only”) that might break existing flexible inputs?

Unrequested Features: Did it add methods or parameters you didn’t ask for?

Reviewing Gemini 3.0 Code

When reviewing code from Gemini 3.0, look for:

Missing Safeguards: Did it skip input validation on critical public methods?

Edge Cases: Did it handle nulls, empty arrays, or network failures?

Documentation: Did it skip comments or type definitions that would help future maintainers?

Skipped Requirements: Did it implement all the requirements, or just the obvious ones?

Testing Implications

Since GPT-5.1 adds unrequested validation logic (like checking for negative tokens in Test 1), you must verify that these checks match your actual business rules. Claude Opus 4.5 tends to implement everything requested (and more), so verify that all the extra features align with your needs. With Gemini 3.0, the generated code does only what you asked, so you don’t need to audit extra logic. However, you do need to manually add any safety checks or requirements it skipped.
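One lightweight way to do that verification is to pin each business rule in a test before accepting generated code. A hedged sketch using the standard `unittest` module follows; `try_consume` here is a stand-in for whatever function is under review, not code from any of the three models.

```python
import unittest


def try_consume(available: float, tokens: float) -> bool:
    """Stand-in for generated code under review (hypothetical)."""
    if tokens <= 0:
        raise ValueError("tokens must be positive")
    return available >= tokens


class ContractTests(unittest.TestCase):
    def test_zero_tokens_are_rejected(self):
        # Encodes a business decision. If zero-token calls must be allowed,
        # this test should be inverted and the generated guard removed.
        with self.assertRaises(ValueError):
            try_consume(5, 0)

    def test_request_within_budget_is_allowed(self):
        self.assertTrue(try_consume(5, 3))

    def test_request_over_budget_is_denied(self):
        self.assertFalse(try_consume(1, 3))


def run_contract_tests() -> bool:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ContractTests)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```

The same tests catch both failure modes: a guard that is stricter than the business rule, and a missing guard that the rule requires.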

Prompting Strategy

With Claude Opus 4.5: If you want minimal code, explicitly say so. Otherwise, expect full implementations with proper error handling, environment variables, and organized sections.

With GPT-5.1: If you need a specific, minimal implementation, explicitly tell it what not to do (e.g., “Do not add extra validation,” “Keep the implementation minimal”).

With Gemini 3.0: If you need production-ready code, explicitly ask for the “extras” (e.g., “Include JSDoc comments,” “Handle edge cases,” “Add input validation,” “Implement all 10 requirements”).

Verdict

All three models are capable of handling complex coding tasks. Each has a distinct style that makes it better suited for different use cases.

Claude Opus 4.5 produces code that is comprehensive, organized, and production-ready. It was the fastest in our tests (7 minutes total) while scoring highest (98.7% average). It implements all requirements, including ones that other models miss, and adds features like environment variables, rate limiting, and runtime configuration automatically.

GPT-5.1 produces code that is thorough, defensive, and well-documented. It tends to reason for longer before outputting code, and includes safeguards and documentation automatically, often anticipating needs beyond the explicit requirements.

Gemini 3.0 produces code that is exact, efficient, and minimal. It is the cheapest option ($1.10 total) and implements exactly what is specified in the prompt, without adding unrequested features or safeguards.

Choose based on your needs:

If you want complete implementations with all requirements met on the first try, Claude Opus 4.5 fits best.

If you want defensive code with built-in safety and backward compatibility, GPT-5.1 fits best.

If you want simple, precise code that matches your specs exactly at the lowest cost, Gemini 3.0 fits best.

Developers now have multiple strong models that can handle hard tasks. Your choice depends on which trade-offs matter most: completeness (Claude Opus), defensiveness (GPT), or precision (Gemini).
