The Year Senior Engineers Stopped Rolling Their Eyes at AI Coding

Maybe 2025 isn't the year of the Linux Desktop, but I think that in retrospect it could be looked back on as the year AI coding went mainstream.

As 2025 ends, something remarkable has happened. The same senior engineers who dismissed AI coding tools as “fancy autocomplete” in early 2025 are now shipping production code they’ve never read—and bragging about it. At the stroke of midnight in San Francisco, it’s worth taking stock of how fast this turned.

I’ve tracked this shift since joining Kilo Code in March. Back then, AI articles on Hacker News were reliably downvoted with choruses of “it’s just hype” or “slop.” Today, over a third of top HN stories have an AI angle. The message from millennial and older engineers is clear: AI is here to stay and ready for the mainstream.

Changing Voices, Changing Hearts

Sure, I live in my own Twitter bubble. But when people I’ve followed for years all say variations of the same thing, it’s hard to dismiss.

Peter Steinberger, who built PSPDFKit and has shipped production code for over a decade, wrote in “Shipping at Inference-Speed”:

“The amount of software I can create is now mostly limited by inference time and hard thinking. And let’s be honest—most software does not require hard thinking.”

And this confession:

“I don’t read much code anymore. I watch the stream and sometimes look at key parts, but I gotta be honest—most code I don’t read.”

This is someone who’s been in the trenches of iOS development for years. Not a hype-man or an AI founder. This is a practitioner calling it as he sees it.

Boris Cherny is, yes, the creator of Claude Code, but he is also a very senior engineer (and a published TypeScript author):

“A year ago, Claude struggled to generate bash commands without escaping issues. Fast forward to today. In the last thirty days, I landed 259 PRs—497 commits, 40k lines added, 38k lines removed. Every single line was written by Claude Code + Opus 4.5.”

Every. Single. Line. From someone who builds the tool.

What Actually Changed

The difference between early 2025 and now isn’t just model improvements. The tools crossed a threshold where senior engineers trust them enough to change their entire workflow.

Armin Ronacher, creator of Flask, captured this perfectly:

“The puzzle is still there. What’s gone is the labor. I never enjoyed hitting keys, writing minimal repro cases with little insight, digging through debug logs, or trying to decipher some obscure AWS IAM permission error. That work wasn’t the puzzle for me. It was just friction, laborious and frustrating. The thinking remains; the hitting of the keys and the frustrating is what’s been removed.”

The key insight: We’re not outsourcing thinking to AI. We’re outsourcing the frustrating key-hitting.

Liz Fong-Jones frames it differently:

“In essence a language model changes you from a programmer who writes lines of code, to a programmer that manages the context the model has access to, prunes irrelevant things, adds useful material to context, and writes detailed specifications. If that doesn’t sound fun to you, you won’t enjoy it.”

She compares it to managing a junior developer who’s read every textbook but has zero practical experience with your codebase—and forgets anything older than an hour. Your job becomes managing that context effectively.

The Question of Quality

“But is the code any good?” That’s the question I hear most.

Mitchell Hashimoto, co-founder of HashiCorp, shared something fascinating. Yes, it feels like 95%+ of bug reports are AI-generated garbage. But there’s a flip side:

“Slop drives me crazy and it feels like 95+% of bug reports, but man, AI code analysis is getting really good. There are users out there reporting bugs that don’t know ANYTHING about our stack, but are great AI drivers and producing some high quality issue reports.”

He describes a contributor experiencing Ghostty crashes who used AI to write a Python script to decode crash files, match them with dSYM files, analyze the codebase, and fix four real crashing bugs.

The person didn’t know Zig, macOS dev, or terminals. But they drove the AI with expert skill.

“They didn’t just toss slop up on our repo. They came to Discord as a human, reached out as a human, and talked to other humans about what they’ve done. They were careful and thoughtful about the process.”

The tools amplify what you bring to them.

Not Changed: The Hard Part (sorry not sorry)

Jason Gorman, who has 25 years of experience and coined the word “codemanship”, wrote something that’s been bouncing around my head:

“The hard part of computer programming isn’t expressing what we want the machine to do in code. The hard part is turning human thinking—with all its wooliness and ambiguity and contradictions—into computational thinking that is logically precise and unambiguous, and that can then be expressed formally in the syntax of a programming language.”

This was true when programmers punched cards. It was true for COBOL. Visual Basic. And it’s true for prompting LLMs.

“The hard part has always been—and likely will continue to be for many years to come—knowing exactly what to ask for.”

This is why “vibe coding” works for some and produces garbage for others. The people succeeding aren’t the ones with the best prompts—they’re the ones who understand the problem deeply enough to know when the AI got it right.

The future of software development is software developers

Where This Leaves Us

Simon Willison (of Django fame) published something every developer needs to read:

“In all of the debates about the value of AI-assistance in software development there’s one depressing anecdote that I keep on seeing: the junior engineer, empowered by some class of LLM tool, who deposits giant, untested PRs on their coworkers—or open source maintainers—and expects the ‘code review’ process to handle the rest.”

“This is rude, a waste of other people’s time, and is honestly a dereliction of duty as a software developer.”

The tool doesn’t change the job. The job is still to ship working code. What’s changing: writing code increasingly means steering the ship, getting a “junior developer” to write the code, reviewing it, and proving it works.

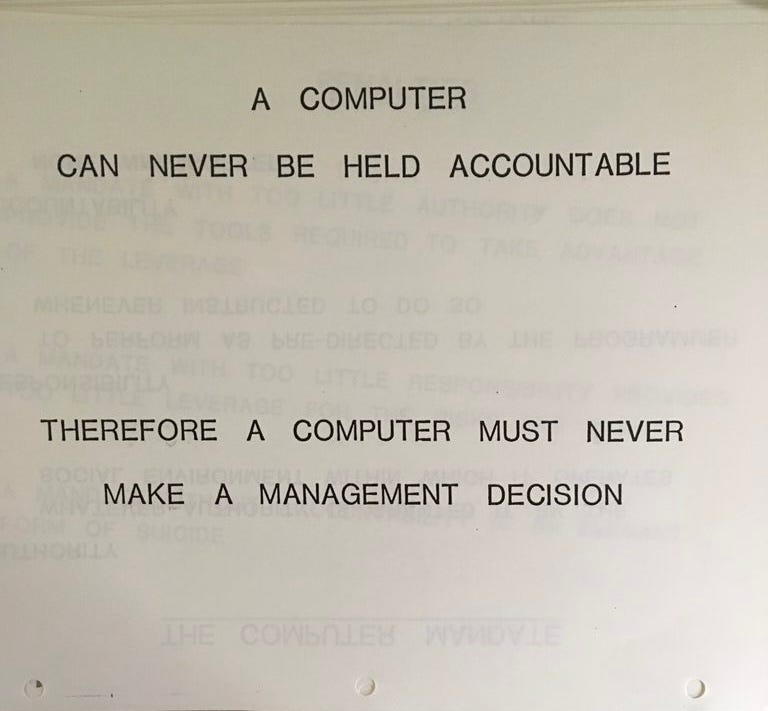

“A computer can never be held accountable. That’s your job as the human in the loop.”

IBM training manual (1979) and Simon Willison (2025)

What I’ve Learned

Senior and Staff+ engineers shipping with AI every day aren’t saying AI is replacing them. What they are saying:

The tools work now. Not perfectly. Not replacing thinking. But well enough that ignoring them is a competitive disadvantage.

The new skill set matters. Managing context, writing specs, knowing what to ask…these are the new vim shortcuts.

The fundamentals still apply. Testing, verification, architectural thinking, understanding your problem domain. If anything, these matter more.

Quality depends on the driver. Same tools, different hands, wildly different results. The difference isn’t just statistics…human judgment still matters.

Theo of t3.chat fame summed up where many developers are landing:

“If you think AI coding is a fad, I get it…If you tried these tools out during the Copilot autocomplete era, I understand entirely why you wrote them off.

“all of the best devs I know are using AI heavily…once you see what these tools can do, it’s hard to talk about it genuinely without sounding like a paid shill.”

“People who insist these tools are useless - I get it. They felt useless just a few months ago. Your takes are dated. AI is fundamentally changing how we work. It won’t replace us, but it is already writing the majority of code being created every day.”

I wouldn’t have written this post eighteen months ago. The evidence wasn’t there. Crazy claims of “no more software engineers in 6 months” made it easy to write off AI completely. It’s still hard for me to write this post given that I work at an AI coding company.

But one way or another, as 2025 ends, the people I respect most in this industry have all independently arrived at the same place: this is real, and it’s worth paying attention to.

The ball is dropping. The tools are ready. Happy New Year—2026 is going to be interesting.

It is easier to write this post, even at an AI company, because Kilo Code is open source and has completely free options if you want to try agentic AI coding yourself. We focus on transparency: you can see exactly what the AI is doing and why. No black boxes.

Senior Engineers will become Senior Intent Verification Engineers.

I can tell you that it's mostly rejected and treated with skeptical dismissal. I have developed, with AI-assisted coding, a stack to help use self-hosted AI, which I now do. Trying to share that ability has been a terrible experience. I can self-host with 4 GB of RAM and no GPU. It's only a 14B model, but when you tell someone it's AI-assisted, you might as well slap them in the face lol.