Beyond Vibe Coding: The Art and Science of Prompt and Context Engineering

Lessons Learned Scaling AI Agents Without Losing Control

If you give an AI a simple instruction, it returns a masterpiece. Given a complex instruction, it turns into a literal, pedantic junior developer—one who has read all your documentation but lacks a single ounce of common sense.

LLMs aren’t magic, and they aren’t mind readers. If you leave gaps in your instructions, the AI will fill them, usually not the way you intended.

To build production-grade software at Kilo Speed, you need to master two pillars of Agentic Engineering: Professional Prompting and Context Engineering.

Pillar 1: Professional Prompting (Specificity Over Vibes)

The biggest mistake you can make is relying on pure “vibe coding”: one-shot prompts that lack constraints. LLM output is never fully deterministic, but to get predictable, repeatable results you need to treat your prompt like a technical spec, not a suggestion.

The Research → Plan → Implement Framework

At Kilo, we swear by this structure, which forces deliberate thinking before a single line of code is generated:

Research: Have the AI explore the codebase and summarize its understanding.

Plan: Create a step-by-step implementation plan, including specific files and snippets.

Implement: Only after you, the human, have reviewed the research and the plan do you move to execution.

Why this works: Human review at the research and planning checkpoints is your highest-leverage activity. Catching a misunderstanding during the planning phase is far cheaper than debugging a cascading error in 500 lines of generated code.
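The checkpoint structure above can be sketched as a small gate that refuses to accept output for a phase until a human has approved every earlier phase. This is a minimal illustration under our own assumptions, not Kilo's implementation; the `Workflow` class and its method names are hypothetical.

```python
from dataclasses import dataclass, field

PHASES = ("research", "plan", "implement")


@dataclass
class Workflow:
    """Hypothetical tracker for a Research -> Plan -> Implement session."""
    artifacts: dict = field(default_factory=dict)  # phase -> text produced by the agent
    approved: set = field(default_factory=set)     # phases a human has signed off on

    def submit(self, phase: str, text: str) -> None:
        """Record the agent's output for a phase, enforcing phase order."""
        if phase not in PHASES:
            raise ValueError(f"unknown phase: {phase}")
        index = PHASES.index(phase)
        # Every earlier phase must already be human-approved.
        missing = [p for p in PHASES[:index] if p not in self.approved]
        if missing:
            raise PermissionError(f"{phase} blocked; unapproved: {missing}")
        self.artifacts[phase] = text
        # New output invalidates any earlier sign-off for this phase.
        self.approved.discard(phase)

    def approve(self, phase: str) -> None:
        """Human sign-off; only valid for a phase that has output to review."""
        if phase not in self.artifacts:
            raise ValueError(f"nothing to review for {phase}")
        self.approved.add(phase)
```

The design choice worth noting is that approval is attached to a specific artifact: if the agent rewrites its research summary, the earlier sign-off is dropped and the plan stays blocked until a human looks again.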

Tactical Prompting Techniques

Role assignment: Tell the AI to act as a “Senior Security Engineer” or a “Vue.js Specialist”. This steers the model toward the vocabulary, priorities, and conventions associated with that role.

Rubber ducking: Use the agent to challenge your own assumptions. Ask, “What are the risks of this architectural choice?”

Meta-prompting: Give explicit instructions about the output format (e.g., “Return only the JSON schema, no conversational filler”).
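Two of these techniques are easy to make mechanical: state the role and the output format explicitly, then reject any reply that breaks the format. The sketch below assumes the reply arrives as a plain string; `build_prompt` and `parse_json_only` are hypothetical helper names, and no particular model API is implied.

```python
import json


def build_prompt(role: str, task: str, output_rule: str) -> str:
    """Assemble a prompt from an explicit role, task, and output constraint."""
    return "\n\n".join([
        f"You are a {role}.",
        f"Task: {task}",
        f"Output format: {output_rule}",
    ])


def parse_json_only(reply: str) -> dict:
    """Accept a reply only if it is a single JSON object with no extra text."""
    # json.loads raises json.JSONDecodeError (a ValueError subclass)
    # when the reply contains conversational filler around the JSON.
    data = json.loads(reply.strip())
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return data
```

Validating the reply in code means a format violation fails loudly at the boundary instead of leaking into whatever consumes the output next.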

Pillar 2: Context Engineering (And Why Better Prompts Aren’t Enough)

You can write the perfect prompt, but if the “RAM” of the AI is cluttered, it will still fail. This is where Context Engineering comes in.

Your AI’s context window, the amount of information it can reason over at once, is your most precious resource. Even if a model advertises a “million-token window”, performance often degrades once the window is only 40-50% full.

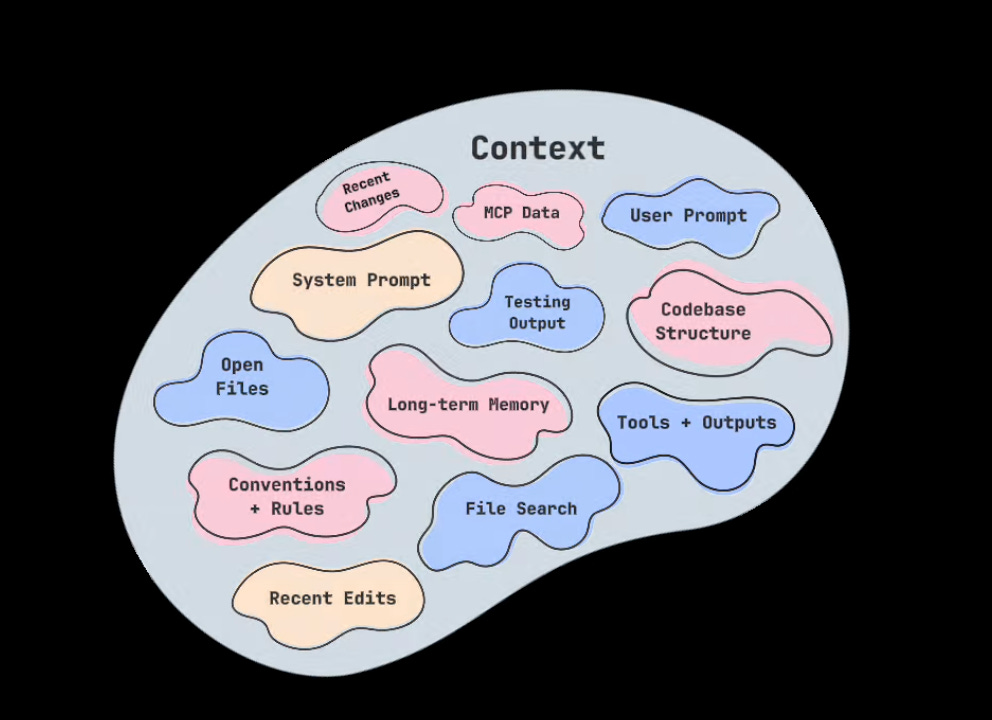
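One way to act on this is to track how full the window is before each request. The sketch below is a rough budget check, not a real tokenizer: the four-characters-per-token ratio is an assumed heuristic that varies by model and language, and the 0.4 default threshold simply mirrors the lower end of the 40-50% range mentioned above. All function names are hypothetical.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate (assumed ratio; real tokenizers differ)."""
    return math.ceil(len(text) / chars_per_token)


def context_usage(parts: dict[str, str], window_tokens: int) -> float:
    """Fraction of the context window consumed by all parts combined."""
    total = sum(estimate_tokens(text) for text in parts.values())
    return total / window_tokens


def over_budget(parts: dict[str, str], window_tokens: int,
                threshold: float = 0.4) -> bool:
    """True once estimated usage reaches the chosen threshold."""
    return context_usage(parts, window_tokens) >= threshold
```

Keeping the parts in a dictionary keyed by source (system prompt, file contents, tool output) also makes it easy to see which one is eating the budget.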

What ends up in the context?

System prompt

User prompt

Your codebase structure

Current file and dependencies

Recent changes/history

Project conventions and rules

Available tools and their outputs

Built-in long-term memory

Everything generated during the session: file searches, the agent’s understanding of the code flow, any edits made, build and test output, and any MCP data

The Four Context Failures

To engineer better context, you have to avoid these four traps:

Context poisoning: When a hallucination early in the chat gets referenced repeatedly, causing the AI to “believe” its own lies.

Context distraction: Including your entire 2,000-line file history when you only need to fix a single 10-line function.

Context confusion: Irrelevant tools or files (like React patterns in a Vue project) leaking into the reasoning.

Context clash: When old API versions and new ones are both in the window, and the AI picks the wrong one.
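Of the four, context clash is the easiest to check for mechanically. The sketch below assumes each item loaded into the context has been tagged with the library and version it documents; that tagging scheme, the `ContextItem` type, and `find_clashes` are all hypothetical. It covers only this one failure mode.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    source: str    # where the item came from, e.g. a file path or doc title
    library: str   # library or API the item describes
    version: str   # version of that library the item was written for


def find_clashes(items: list[ContextItem]) -> dict[str, list[str]]:
    """Return libraries that appear in the context under more than one version."""
    versions = defaultdict(set)
    for item in items:
        versions[item.library].add(item.version)
    return {lib: sorted(v) for lib, v in versions.items() if len(v) > 1}
```

A non-empty result is a signal to drop the stale items before prompting, rather than hoping the model picks the right version.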

The Context Engineering Toolkit

How do we keep the context window “clean”? We use three main strategies:

1. Write and Select (The AGENTS.md approach)

Don’t let the AI guess what matters. Use persistent files like AGENTS.md to store long-term project context, conventions, and rules. You, the human, should decide which files are loaded into the “smart zone” of the context window.
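As a rough sketch of "write and select", the function below builds a context string from the project's AGENTS.md plus only the files a human explicitly names. The section headings and the `build_context` helper are our own assumptions; real agents load these files in their own ways.

```python
from pathlib import Path


def build_context(project_root: Path, selected: list[str],
                  rules_file: str = "AGENTS.md") -> str:
    """Combine persistent project rules with a hand-picked set of files."""
    sections = []
    rules = project_root / rules_file
    if rules.is_file():
        # Long-term conventions go first so they frame everything after them.
        text = rules.read_text(encoding="utf-8")
        sections.append(f"## Project rules ({rules_file})\n{text}")
    for rel in selected:
        # Only files the human chose are loaded; nothing is discovered implicitly.
        text = (project_root / rel).read_text(encoding="utf-8")
        sections.append(f"## File: {rel}\n{text}")
    return "\n\n".join(sections)
```

Anything not in `selected` never reaches the model, which is the point: selection is a human decision, not a guess.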

2. Compress

Large error logs and long conversations drown out the goal. Ask the AI to summarize its progress into a todo.md or progress.md file. This “intentional compaction” keeps the window focused on the immediate task.
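Compaction does not always need the model. For noisy logs, a mechanical pre-filter can shrink the input before it ever reaches the context window. The sketch below is our own illustration, not the AI-written summary described above: it collapses consecutive duplicate lines and keeps only the start and end of the log. The `compress_log` name and its defaults are hypothetical.

```python
def compress_log(log: str, head: int = 5, tail: int = 10) -> str:
    """Collapse consecutive duplicate lines, then keep only the head and tail."""
    collapsed = []  # list of [line, repeat_count]
    for line in log.splitlines():
        if collapsed and collapsed[-1][0] == line:
            collapsed[-1][1] += 1
        else:
            collapsed.append([line, 1])
    lines = [f"{text} (x{count})" if count > 1 else text
             for text, count in collapsed]
    if len(lines) <= head + tail:
        return "\n".join(lines)
    omitted = len(lines) - head - tail
    marker = f"... {omitted} lines omitted ..."
    # Slice from an explicit index so tail=0 keeps nothing from the end.
    return "\n".join(lines[:head] + [marker] + lines[len(lines) - tail:])
```

The tail gets the larger default because the final lines of a build or test log usually contain the actual error.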

3. Isolate

When the context gets too “noisy”, start fresh. Use scratchpads or spin up a new agent for a specific sub-task. Only load the specific Model Context Protocol (MCP) tools you need for the job at hand.
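Isolation can be sketched as spawning a child context that inherits nothing by default: no chat history, and only the tools named for the sub-task. The `AgentContext` type and `spawn_subagent` function are hypothetical and stand in for whatever your agent framework provides; tools are modeled as plain entries in a dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    task: str
    tools: dict = field(default_factory=dict)     # tool name -> callable or config
    messages: list = field(default_factory=list)  # conversation history


def spawn_subagent(parent: AgentContext, subtask: str,
                   needed_tools: list[str], handoff: str = "") -> AgentContext:
    """Create a fresh context: no inherited history, only the named tools."""
    unknown = [name for name in needed_tools if name not in parent.tools]
    if unknown:
        raise KeyError(f"tools not available: {unknown}")
    child = AgentContext(
        task=subtask,
        tools={name: parent.tools[name] for name in needed_tools},
    )
    if handoff:
        # A short written summary is the only thing carried over.
        child.messages.append(handoff)
    return child
```

The optional `handoff` string is where a compacted progress summary would go, so the sub-agent starts with the conclusion and none of the noise that led to it.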

The Golden Rule: Don’t Outsource Your Thinking

As Dex Horthy of humanlayer.ai says, “Don’t outsource your thinking.”

AI is an amplifier. It amplifies your engineering mindset (or lack thereof). By mastering the transition from “vibe coding” to intentional context engineering, you stop being a passenger and start being the architect.

—

Check out our new Learning Paths for Agentic Engineering, an open-source repository of the best resources on agentic engineering in software development.
