1-Pizza Teams: Agentic Engineering & Team Size

The best agentic engineering teams are shrinking human team size

A director at a traditional company recently told Gergely Orosz something that stuck with me: “We’re starting to rename 2-pizza teams to 1-pizza teams. With AI, large teams just no longer make sense and slows things down.”

Amazon’s two-pizza rule has been engineering gospel for, what, 20 years? If you need more than two pizzas to feed the team, the team is too big. Coordination costs eat you alive. Keep it small. But the math is changing again: individual engineers with AI tools can now accomplish what used to require small teams.

Not Fewer People

This isn’t about cutting headcount. I’ve seen too many companies use the “AI means fewer people” framing to justify bad decisions, and many have already reversed those decisions.

In reality, at the best organizations embracing agentic engineering, teams get smaller not because you’re cutting people but because each person’s output has grown. When one engineer with AI tools can accomplish what used to require a small team, teams naturally consolidate. Fewer people doing more, not fewer people doing the same.

The Research

Harvard and Wharton ran a field study at P&G that should make every engineering manager pay attention: individuals using AI performed as well as teams without it.

Teams with AI also significantly outperformed teams without AI at producing top-tier ideas.

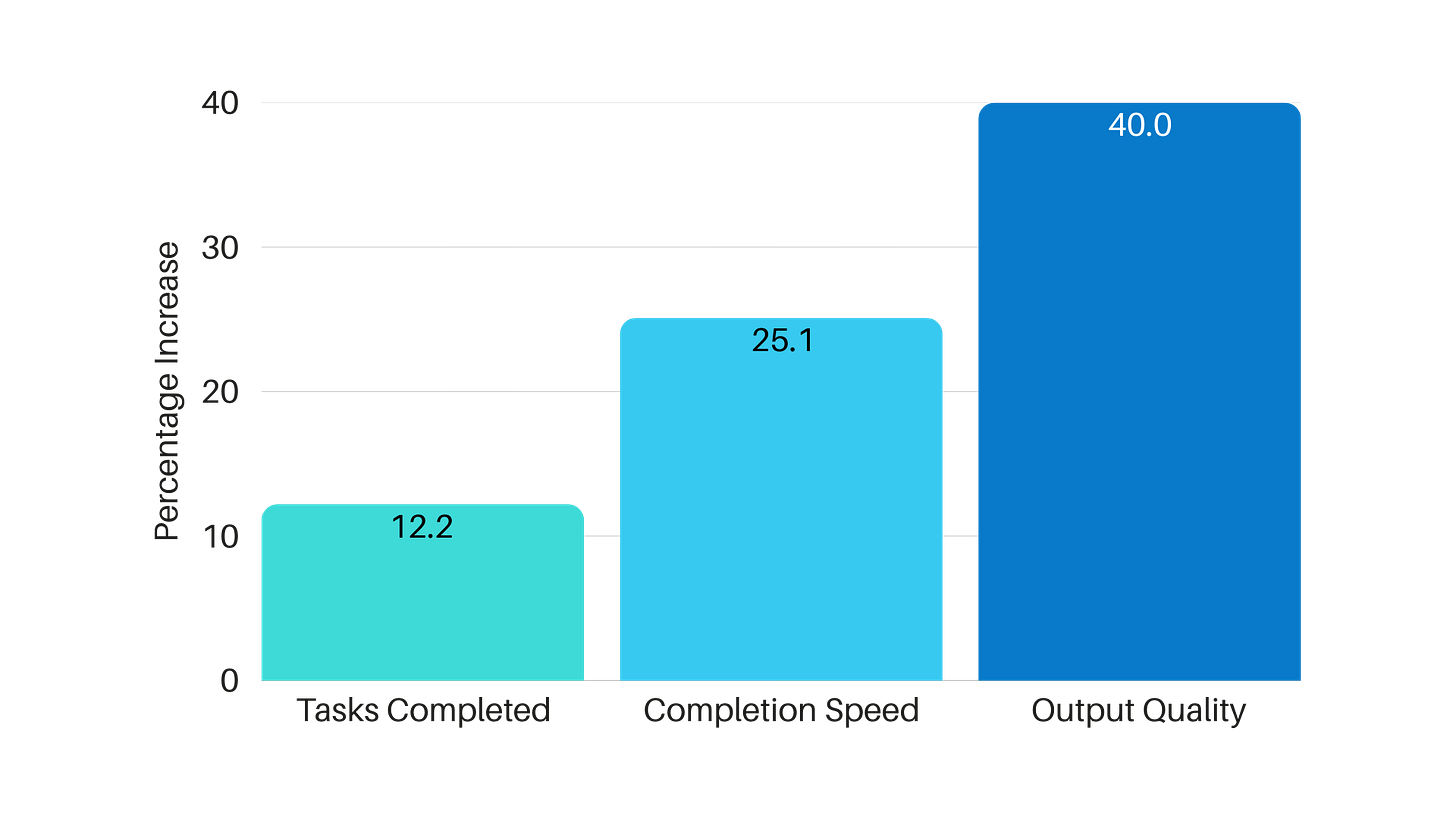

Anthropic also recently published research on its own engineers, and the numbers are wild. They report using Claude in 60% of their work and a 50% productivity boost. Those figures represent a 2-3x increase from just a year ago.

27% of their Claude-assisted work consists of tasks that wouldn’t have been done otherwise. So engineers there aren’t just doing their existing work faster; they’re also taking on work that previously would have cost too many “person hours” to justify.

I’ve felt this myself: I’m now writing throwaway scripts for one-off data analysis or transformation that I probably wouldn’t have bothered with before. As code gets cheaper to produce, personalized software solutions become much easier to justify spending tokens on.

What “agent boss” actually means

Microsoft’s WorkLab coined the term “agent boss,” and I kind of hate it, but I also can’t think of an objectively better one. The idea is that everyone, from interns to executives, will manage their own constellation of AI agents.

It’s like extending the traditional engineering hierarchy into a new dimension.

Think about what a senior engineer actually does with AI tools now:

Decomposing work into agent-appropriate chunks

Reviewing agent output for quality and correctness

Orchestrating parallel workstreams across multiple agents and worktrees

Making judgment calls the agents can’t handle

Maintaining context that agents lose between sessions
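To make the decompose, orchestrate, and review loop a bit more concrete, here’s a minimal Python sketch. It assumes a hypothetical `run_agent` callable that takes one task description and returns the agent’s output (in practice it might wrap a coding agent running in its own worktree); `fan_out`, `review`, `AgentResult`, and `fake_agent` are illustrative names, not any tool’s API. The point is the shape: chunks go out in parallel, and nothing counts as done until a human signs off.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentResult:
    task: str
    output: str
    approved: bool = False  # set only by the human review step


def fan_out(
    tasks: list[str], run_agent: Callable[[str], str], max_agents: int = 4
) -> list[AgentResult]:
    """Send independent chunks to agents in parallel; results keep task order."""
    with ThreadPoolExecutor(max_workers=max_agents) as pool:
        outputs = list(pool.map(run_agent, tasks))
    return [AgentResult(task, out) for task, out in zip(tasks, outputs)]


def review(
    results: list[AgentResult], accept: Callable[[AgentResult], bool]
) -> list[AgentResult]:
    """Human-in-the-loop gate: mark approvals and return what needs rework."""
    needs_rework = []
    for result in results:
        result.approved = accept(result)
        if not result.approved:
            needs_rework.append(result)
    return needs_rework


def fake_agent(task: str) -> str:
    # Hypothetical stand-in for a real coding agent working in its own worktree.
    return f"patch for: {task}"


if __name__ == "__main__":
    chunks = ["add input validation", "write migration", "update docs"]
    results = fan_out(chunks, fake_agent)
    # Pretend the reviewer rejects the migration and accepts everything else.
    rework = review(results, accept=lambda r: r.task != "write migration")
    print([r.task for r in rework])  # ['write migration']
```

Keeping review as an explicit gate is the design choice that matters here: the agents supply throughput, while the judgment calls stay with the engineer.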

The job title stays “engineer” but the work looks more like... well, like being a manager. A manager of very fast, occasionally confused interns who never get tired.

The other day, in an engineering meeting at Kilo, one of our engineers was asked about a new feature on the backlog. The answer? “Oh yeah, I have an agent looking at that currently.” Nobody questioned it, because that’s the new normal for us: it’s part of shipping at “Kilo Speed.”

The human-agent ratio

Microsoft is already talking about this as a new metric every leader will need: the human-agent ratio. How much of your output comes from direct human work vs. agent-assisted work? What’s the optimal balance for your specific work?
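As a rough illustration of what measuring this could look like, here is one possible way to operationalize the metric, not a standard definition. The minimal Python sketch below assumes you can tag each piece of work as agent-assisted or not (say, via a PR label) and give it a rough size; `WorkItem`, `agent_share`, `share_by_category`, and the category names are all hypothetical. It computes the agent-assisted share of output overall and per category, since the right balance likely differs by kind of work.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class WorkItem:
    category: str          # hypothetical labels, e.g. "feature", "bugfix", "ops"
    agent_assisted: bool   # hypothetical tag, e.g. taken from a PR label
    weight: float = 1.0    # hypothetical size measure (PRs, story points, ...)


def agent_share(items: list[WorkItem]) -> float:
    """Share of total weighted output that was agent-assisted, from 0.0 to 1.0."""
    total = sum(i.weight for i in items)
    if total == 0:
        return 0.0
    return sum(i.weight for i in items if i.agent_assisted) / total


def share_by_category(items: list[WorkItem]) -> dict[str, float]:
    """The same share computed per category, since the balance varies by task."""
    groups: dict[str, list[WorkItem]] = defaultdict(list)
    for item in items:
        groups[item.category].append(item)
    return {cat: agent_share(group) for cat, group in groups.items()}


if __name__ == "__main__":
    items = [
        WorkItem("feature", True, 3.0),
        WorkItem("feature", False, 1.0),
        WorkItem("bugfix", True, 1.0),
        WorkItem("ops", False, 2.0),
    ]
    print(agent_share(items))        # 4/7, about 0.571
    print(share_by_category(items))  # {'feature': 0.75, 'bugfix': 1.0, 'ops': 0.0}
```

Weighting by size rather than counting items keeps a handful of large agent-assisted changes from being drowned out by many tiny manual ones, though what counts as “size” is exactly the kind of thing each team will have to experiment with.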

The right ratio will vary by task, process, and industry; what matters is finding the balance. Miss it one way and you leave AI’s value on the table; miss it the other and you drown your team in tool chaos.

And I don’t think anyone has figured out the right ratios yet. We’re all running experiments.

So what does this mean practically?

If you’re a team lead, the questions to ask yourself:

Where are you overstaffed for the AI era? A team of 8 doing what 4 people with good AI workflows could handle isn’t sustainable once your competitors figure this out. Think about what your existing team could accomplish with better leverage.

Are you measuring AI impact at all? Most teams aren’t. They’ve adopted tools but have no visibility into how those tools are being used or what output they’re generating.

What work aren’t you doing that you could? That 27% finding from Anthropic is worth sitting with for a second. If over a quarter of AI-assisted work is stuff that wouldn’t have happened otherwise, there’s a whole category of valuable work you might not be doing because it seemed too expensive.

Trade-offs

InsideAI’s analysis argues that AI actually makes small autonomous teams more important, not less. When individual contributors can have outsized impact through AI leverage, the coordination overhead of large teams becomes even more costly.

Engineers can accomplish a lot more individually, which forces organizations built around the old math to rethink their structure.

The teams that figure this out first will have a real advantage. The ones that don’t will be overstaffed and slow, wondering what happened.

Related: I’ve written about AI-native economics, if you want more on what AI-native engineering orgs look like.